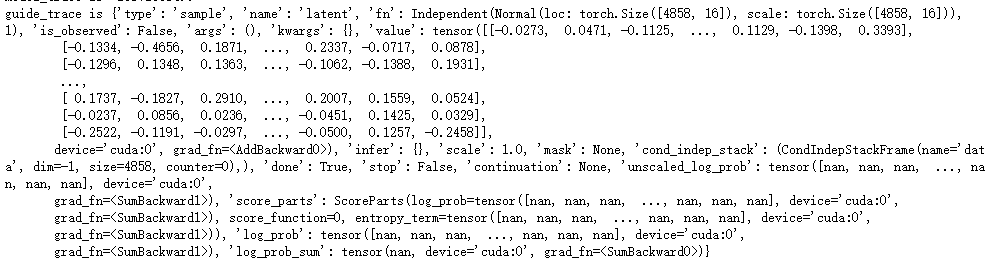

I want to add a linear layer after the encoder in a VAE to get a smaller latent space for a group of data, but the loss returns NaN.

Is there a problem with this idea? How can I avoid the NaN loss in the VAE model?

    class FC_en(nn.Module):
        def __init__(self):
            super().__init__()
            self.fc1 = nn.Linear(2429*32, 64)
            self.BN1 = nn.BatchNorm1d(64)

        def forward(self, x):
            z_loc = self.BN1(self.fc1(x))
            return z_loc

    class FC_de(nn.Module):
        def __init__(self):
            super().__init__()
            self.fc1 = nn.Linear(64, 2429*32)
            self.BN1 = nn.BatchNorm1d(2429*32)

        def forward(self, z):
            x = self.BN1(self.fc1(z))
            return x

    class VAE(nn.Module):
        def __init__(self, z_dim=16, hidden_dim=1000, use_cuda=True):
            super().__init__()
            # create the encoder and decoder networks
            self.encoder = Encoder(z_dim, hidden_dim)
            self.decoder = Decoder(z_dim, hidden_dim)
            self.fc3 = FC_en()
            self.fc4 = FC_de()

            if use_cuda:
                # calling cuda() here will put all the parameters of
                # the encoder and decoder networks into gpu memory
                self.cuda()
            self.use_cuda = use_cuda
            self.z_dim = z_dim

        # define the model p(x|z)p(z)
        def model(self, x):
            # register PyTorch module `decoder` with Pyro
            pyro.module("decoder", self.decoder)
            with pyro.plate("data", x.shape[0]):
                # setup hyperparameters for prior p(z)
                z_loc = x.new_zeros(torch.Size((x.shape[0], self.z_dim)))
                z_scale = x.new_ones(torch.Size((x.shape[0], self.z_dim)))
                # sample from prior (value will be sampled by guide when computing the ELBO)
                z = pyro.sample("latent", dist.Normal(z_loc, z_scale).to_event(1))
                # decode the latent code z
                loc_img = self.decoder(z)
                loc_img = loc_img.reshape(-1, 200*200)
                pyro.sample("obs", dist.Bernoulli(loc_img).to_event(1), obs=x.reshape(-1, 200*200))

        # define the guide (i.e. variational distribution) q(z|x)
        def guide(self, x):
            # register PyTorch module `encoder` with Pyro
            pyro.module("encoder", self.encoder)
            with pyro.plate("data", x.shape[0]):
                # use the encoder to get the parameters used to define q(z|x)
                z_loc, z_scale = self.encoder(x)
                z_sum = torch.cat((z_loc, z_scale), 1)
                z_sum = z_sum.view(2, 2429*32)
                z_sum_z = self.fc3(z_sum)
                loc_img = self.fc4(z_sum_z)
                loc_img = loc_img.reshape(2429*2, 32)
                z_loc = loc_img[:, 0:16]
                z_scale = loc_img[:, 16:32]
                # sample the latent code z
                pyro.sample("latent", dist.Normal(z_loc, z_scale).to_event(1))
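
One thing that may be worth checking in the guide above: `z_scale` is sliced straight out of the output of `FC_de`, which ends in a `BatchNorm1d` layer. Batch-normalized values are centered around zero, so some entries of `z_scale` are likely to be negative, and a normal distribution is only defined for a strictly positive scale. The sketch below is not the code from the post; it uses only the Python standard library to illustrate the effect, and the names `softplus`, `normal_log_prob`, `raw_scales` and `safe_scales` are hypothetical. In PyTorch, the analogous transform would be `torch.nn.functional.softplus` applied to the raw scale before it is passed to `dist.Normal`.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x)); non-negative for every finite x."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def normal_log_prob(value: float, loc: float, scale: float) -> float:
    """Log-density of Normal(loc, scale), which is only defined for scale > 0."""
    if scale <= 0.0:
        # Assumption for illustration: report the undefined case as NaN,
        # which is how the log of a negative number shows up in tensor code.
        return float("nan")
    z = (value - loc) / scale
    return -0.5 * z * z - math.log(scale) - 0.5 * math.log(2.0 * math.pi)


# Hypothetical unconstrained outputs, e.g. values coming out of a batch norm layer.
raw_scales = [-1.0, 0.0, 1.0]

# Map each raw value to a strictly positive scale; the small constant guards
# against softplus underflowing to exactly 0 for very negative inputs.
safe_scales = [softplus(r) + 1e-6 for r in raw_scales]

for raw, safe in zip(raw_scales, safe_scales):
    before = normal_log_prob(0.0, 0.0, raw)
    after = normal_log_prob(0.0, 0.0, safe)
    print(f"raw={raw:+.1f} log_prob={before}  ->  scale={safe:.4f} log_prob={after:.4f}")
```

If the NaN persists after constraining the scale, the same kind of check may apply to the `Bernoulli` likelihood in `model`, whose probabilities have to lie in [0, 1].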