Hey guys,

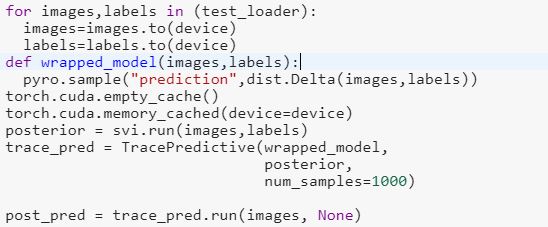

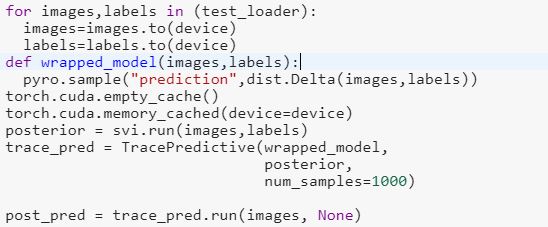

The screenshots above show my model evaluation and inference code. When execution reaches `svi.run`, my CPU memory shoots up to 100 percent, and on Google Colab I get a CUDA out-of-memory error. I reduced the batch size to as little as one image per batch, but that did not help.

I also tried calling `torch.cuda.empty_cache()`, but that did not help either.

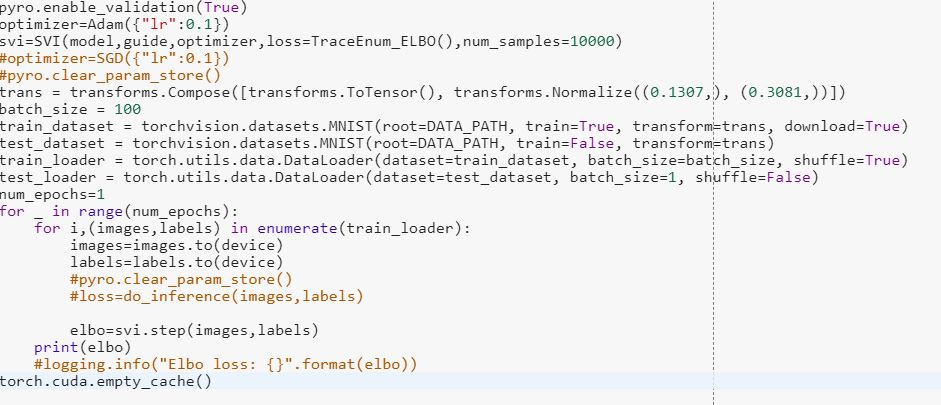

By passing `num_samples=10000` to `SVI` and `num_samples=1000` to `TracePredictive`, you are telling Pyro to store a bag of 10,000 approximate posterior samples of every latent variable in your model and then resample 1,000 posterior predictive samples from that bag. If your model is large, this will take a lot of memory.
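To get a rough feel for the scale, here is a back-of-the-envelope sketch in plain Python. The latent count of one million values and the 4 bytes per value (float32) are assumptions made up for illustration, not numbers from your model, and `posterior_bag_bytes` is a hypothetical helper, not a Pyro function. It also ignores any extra per-sample overhead, so treat the result as a lower bound.

```python
def posterior_bag_bytes(num_latent_values, num_samples, bytes_per_value=4):
    """Rough lower bound on bytes needed to keep num_samples draws
    of every latent value in memory at once."""
    return num_latent_values * num_samples * bytes_per_value


# Assumption: the model has about 1 million latent values stored as float32.
latent_values = 1_000_000

for n in (10_000, 1_000, 100, 10):
    gib = posterior_bag_bytes(latent_values, n) / 2**30
    print(f"num_samples={n:>6}: ~{gib:.2f} GiB just for the stored samples")
```

The cost grows linearly with `num_samples`, so dropping it by a factor of 100 cuts the stored-sample footprint by the same factor. Reducing the batch size does not touch this term at all, which would explain why it made no difference.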

That was a good catch. Thanks a lot!