Hi! I’m using Pyro’s SVI to perform Bayesian inference. Following one of the nice tutorials, I initialize by picking the best of 100 random seeds, which gives this initial loss:

`seed = 37, initial_loss = 16.00270751953125`

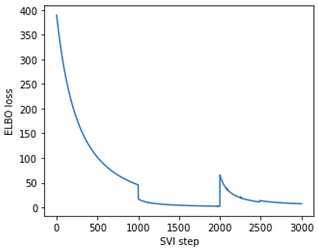

However, once training starts, the loss over the first few steps is much higher than this initial loss, as can be seen in this plot (it starts at around 400):

What might cause this inconsistency? Are the losses returned by `svi.step()` and `svi.loss()` meant to be different, or am I misunderstanding something? I’m confused because I did not run into this with a simpler model. Thanks for any advice!
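If the initial loss is itself a noisy estimate (an assumption here; it depends on how `elbo` handles the hidden sites `u` and `z`), taking the minimum over 100 seeds favors lucky draws, so the reported initial loss can be optimistic compared with the next evaluation of the same initialization. The stdlib-only simulation below illustrates that selection effect. It does not use Pyro, and the per-seed loss values and noise level are hypothetical.

```python
import random
import statistics


def best_of_n(num_seeds, rng, noise_scale=20.0):
    """Pick the seed with the lowest *noisy* loss, then re-evaluate it once.

    Hypothetical setup: every seed has a true expected loss near 100, and
    each evaluation adds Gaussian noise with standard deviation noise_scale.
    """
    true_losses = [100.0 + rng.gauss(0.0, 5.0) for _ in range(num_seeds)]
    estimates = [t + rng.gauss(0.0, noise_scale) for t in true_losses]
    best = min(range(num_seeds), key=estimates.__getitem__)
    # A fresh evaluation of the chosen seed uses a new noise draw.
    fresh = true_losses[best] + rng.gauss(0.0, noise_scale)
    return estimates[best], fresh


rng = random.Random(0)
trials = [best_of_n(100, rng) for _ in range(1000)]
selected_mean = statistics.mean(s for s, _ in trials)
fresh_mean = statistics.mean(f for _, f in trials)
print(f"mean selected (minimum) loss: {selected_mean:.1f}")
print(f"mean re-evaluated loss:       {fresh_mean:.1f}")
```

In this toy setup the selected minimum sits well below the re-evaluated value on average, even though nothing about the chosen initialization changed between the two evaluations.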

Here is the code for initialization and training:

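```python
import pyro
from pyro import poutine
from pyro.infer import SVI
from pyro.infer.autoguide import AutoDelta
from tqdm import tqdm

# model, init_loc_fn, optim, elbo, data and N are defined elsewhere.


def train(num_iterations, losses=None):
    if losses is None:  # avoid sharing one mutable default list across calls
        losses = []
    pyro.clear_param_store()  # resets the global param store
    for _ in tqdm(range(num_iterations)):
        loss = svi.step(data) / N  # normalize by the sample size
        losses.append(loss)
    return losses


def initialize(seed):
    global global_guide, svi
    pyro.set_rng_seed(seed)
    pyro.clear_param_store()
    # MAP-style guide over every site except the hidden 'u' and 'z'.
    global_guide = AutoDelta(
        poutine.block(model, hide=['u', 'z']),
        init_loc_fn=init_loc_fn,
    )
    svi = SVI(model, global_guide, optim, loss=elbo)
    return svi.loss(model, global_guide, data) / N


# Keep the seed with the lowest initial loss, then re-run its initialization.
loss, seed = min((initialize(seed), seed) for seed in tqdm(range(100)))
initialize(seed)
print('seed = {}, initial_loss = {}'.format(seed, loss))
```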