When I try

    import torch
    import pyro.contrib.gp as gp

    kernel = gp.kernels.RBF(1)
    likelihood = gp.likelihoods.Gaussian()
    X = torch.tensor([1., 2., 3., 4., 5.]).double()

    gpmodel = gp.models.VariationalGP(X, None, kernel, likelihood)
    gpmodel.set_data(X, None)
    gpmodel.model()

I invariably get

    (tensor([0., 0., 0., 0., 0.], dtype=torch.float64, grad_fn=<AddBackward0>),
     tensor([1., 1., 1., 1., 1.], dtype=torch.float64, grad_fn=<SumBackward1>))

Is that the expected result, @fehiepsi?

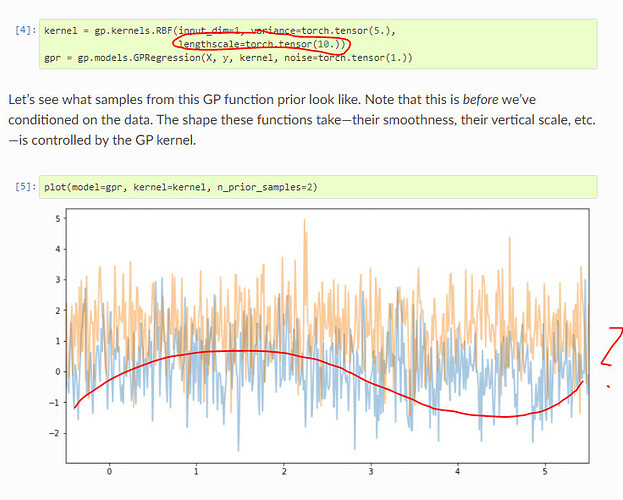

Also, I was looking through the tutorials (Gaussian Processes — Pyro Tutorials 1.8.4 documentation) and noticed that even though the lengthscale is set to 10, the draws from the prior look like white noise:

I would have expected much smoother draws from the prior with that lengthscale.
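
To make that expectation concrete, here is a minimal sketch in plain NumPy. It is not the tutorial's code and does not use Pyro. It assumes a zero-mean GP prior with the usual RBF form `variance * exp(-0.5 * r**2 / lengthscale**2)`; the helper names `rbf_kernel`, `sample_gp_prior`, and `roughness`, as well as the input grid and the jitter value, are my own choices for illustration.

```python
import numpy as np

def rbf_kernel(x1, x2, variance=1.0, lengthscale=1.0):
    """Assumed RBF form: variance * exp(-0.5 * (x - x')^2 / lengthscale^2)."""
    sqdist = (x1[:, None] - x2[None, :]) ** 2
    return variance * np.exp(-0.5 * sqdist / lengthscale**2)

def sample_gp_prior(x, lengthscale, n_draws=3, jitter=1e-6, seed=0):
    """Draw n_draws functions from a zero-mean GP prior evaluated at x."""
    rng = np.random.default_rng(seed)
    # Jitter on the diagonal keeps the Cholesky factorization numerically stable.
    K = rbf_kernel(x, x, lengthscale=lengthscale) + jitter * np.eye(len(x))
    L = np.linalg.cholesky(K)
    z = rng.standard_normal((len(x), n_draws))
    return (L @ z).T  # shape: (n_draws, len(x))

def roughness(draws):
    """Mean absolute difference between neighbouring points of each draw."""
    return np.abs(np.diff(draws, axis=1)).mean()

x = np.linspace(0.0, 5.0, 100)  # hypothetical input grid
smooth = sample_gp_prior(x, lengthscale=10.0)
rough = sample_gp_prior(x, lengthscale=0.05)

print("roughness, lengthscale=10:  ", roughness(smooth))
print("roughness, lengthscale=0.05:", roughness(rough))
```

Under these assumptions, the draws with lengthscale 10 change only slightly between neighbouring inputs, while a lengthscale much shorter than the grid spacing gives something close to white noise. That is why the plot in the tutorial surprised me.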