Hi, I have an HMM with Gaussian latent states. The sequences have unequal lengths, so I use `poutine.mask`. Furthermore, each time point in each sequence has a covariate, which I use in the transition distribution. The model:

```python
import pyro
import pyro.distributions as dist


def model(lengths, delays, sequences=None, batch_size=None):
    # Global parameters, shared by all sequences
    latent_std = pyro.sample('latent_std', dist.Uniform(0.0, 1.0))
    init = pyro.sample('init', dist.Normal(0.0, 1.5))
    increment = pyro.sample('increment', dist.Normal(0.0, 1.0))
    b_delay = pyro.sample('delay', dist.Normal(0.0, 1.5))

    with pyro.plate('sequences', len(lengths), batch_size) as batch:
        lengths = lengths[batch]
        x = init
        for t in pyro.markov(range(int(lengths.max()))):
            # Steps past a sequence's end contribute nothing to the log-density
            with pyro.poutine.mask(mask=t < lengths):
                # Random-walk transition, shifted by the per-step covariate
                x_mean = x + increment + b_delay * delays[batch, t]
                x = pyro.sample(f'x_{t}', dist.Normal(x_mean, latent_std))
                # Binary observation whose logit is the latent state
                obs = None if sequences is None else sequences[batch, t]
                y = pyro.sample(f'y_{t}', dist.Bernoulli(logits=x), obs=obs)
```
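To make the masking explicit: `poutine.mask(mask=t < lengths)` drops the log-probability terms of every site at steps past a sequence's end, so padded entries never influence the loss. The framework-free sketch below (plain Python, not Pyro; `softplus` and `masked_loglik` are hypothetical helper names) shows this for the observation terms only, summing `Bernoulli(logits=x)` log-likelihoods over a padded batch while skipping steps with `t >= length`.

```python
import math


def softplus(v):
    """Numerically stable log(1 + exp(v))."""
    return max(v, 0.0) + math.log1p(math.exp(-abs(v)))


def masked_loglik(logits, obs, lengths):
    """Sum Bernoulli(logits=x) log-likelihoods over a padded batch.

    logits, obs: padded rows, one row per sequence.
    lengths: true length of each sequence; entries at t >= length are skipped.
    """
    total = 0.0
    max_len = max(lengths) if lengths else 0
    for t in range(max_len):
        for i, length in enumerate(lengths):
            if t < length:  # same condition as poutine.mask(mask=t < lengths)
                logit, y = logits[i][t], obs[i][t]
                # log p(y | logit) = y * logit - log(1 + exp(logit))
                total += y * logit - softplus(logit)
    return total


# Usage: the second sequence has length 1, so its padded entries are ignored.
padded_logits = [[0.3, -1.2, 0.8], [2.0, 99.0, 99.0]]
padded_obs = [[1, 0, 1], [1, 0, 0]]
print(masked_loglik(padded_logits, padded_obs, lengths=[3, 1]))
```

Because padded entries are skipped, their values (here `99.0`) are arbitrary.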

I trained this model on some data, and the inferred parameters make sense. I want to do three things:

- Sample new sequences

I want to sample from the posterior predictive. Since my model has covariates, I want to do this sampling with respect to some new covariates. I thought this would work:

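```python
import torch
import pyro.infer

# One new sequence of length 54 with a single non-zero covariate at t = 30.
# `model` is defined above; `guide` is the trained guide.
lengths = torch.IntTensor([54])
delays = torch.zeros((1, 54))
delays[:, 30] = 5

post_samples = pyro.infer.Predictive(model, guide=guide, num_samples=101)(lengths, delays, None)
```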

However, this leads to an opaque shape error whose trace-shape dump ends with:

```
           value   1 |
 sequences dist      |
           value   1 |
       x_0 dist    1 |
           value 200 |
```

The model was trained on 200 sequences, so I deduce that the guide still expects 200 inputs inside `Predictive` (note `value 200` for `x_0`). Is there any way around this?
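The `value 200` line suggests the guide still returns per-sequence latents (`x_0`, `x_1`, ...) sized for the 200 training sequences. A common way around this, sketched here as an assumption rather than a confirmed fix, is to keep only the global sites from each posterior draw and re-simulate the latent chain under the new covariates. In Pyro this would presumably mean drawing the global sites with `Predictive(..., return_sites=[...])` on the training data and passing them as `posterior_samples` to a second `Predictive(model, posterior_samples=...)` called with the new `lengths` and `delays`. The plain-Python sketch below shows only the logic; `GLOBAL_SITES` uses the model's site names, while the draw dictionaries and helper names are hypothetical.

```python
import math
import random

# Global site names from the Pyro model; everything else in a posterior draw,
# such as 'x_0' or 'y_0', is per-sequence and tied to the training batch size.
GLOBAL_SITES = ("latent_std", "init", "increment", "delay")


def keep_global_sites(draw):
    """Keep only the global parameters of one posterior draw (a dict)."""
    return {name: draw[name] for name in GLOBAL_SITES}


def sigmoid(v):
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    z = math.exp(v)
    return z / (1.0 + z)


def simulate(params, delays, rng):
    """Roll the latent chain forward for len(delays) steps with fixed globals."""
    x = params["init"]
    xs, ys = [], []
    for d in delays:
        # Same transition as the model: Normal(x + increment + b_delay * d, latent_std)
        x = rng.gauss(x + params["increment"] + params["delay"] * d,
                      params["latent_std"])
        xs.append(x)
        # Bernoulli(logits=x) observation
        ys.append(1 if rng.random() < sigmoid(x) else 0)
    return xs, ys


def posterior_predictive(draws, delays, seed=0):
    """One simulated sequence per posterior draw, under new covariates."""
    rng = random.Random(seed)
    return [simulate(keep_global_sites(draw), delays, rng) for draw in draws]


# Usage with the covariates from the question: length 54, delay 5 at t = 30.
new_delays = [0.0] * 54
new_delays[30] = 5.0
# Hypothetical posterior draw; 'x_0' stands in for a per-sequence site.
draws = [{"latent_std": 0.3, "init": 0.0, "increment": 0.1, "delay": 0.8,
          "x_0": [0.0] * 200}]
samples = posterior_predictive(draws, new_delays)
```

Each draw yields one sequence, so the spread across draws reflects posterior uncertainty in the global parameters plus the transition noise.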

- Sample the next states of sequences

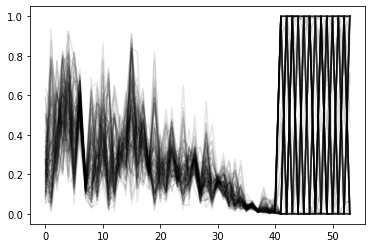

For the sequences that are in my training data, I wish to sample what the trained model thinks the remained of the sequence might look like. UsingPredictiveon the training data works, but if I look at the remainder of the sequences that are shorter than the max sequence, the predictions do not respect the trained parameters and are just noise:
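A plausible reading of the noise: for steps with `t >= length` the mask removes the model's log-density, so nothing ties the guide's values for those steps to the transition dynamics. One way to continue a training sequence, sketched here under that assumption, is to take a posterior draw of the last observed latent state and roll the transition forward explicitly with the trained global parameters. `continue_sequence`, `x_last` and the other argument names are hypothetical inputs, not Pyro API.

```python
import math
import random


def sigmoid(v):
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    z = math.exp(v)
    return z / (1.0 + z)


def continue_sequence(x_last, future_delays, increment, b_delay, latent_std, seed=0):
    """Extend one sequence past its last observed step.

    x_last: hypothetical input, one posterior draw of the latent state at the
            sequence's last observed step (t = length - 1).
    future_delays: covariates for the steps to predict.
    Returns the simulated latent states and the success probability per step.
    """
    rng = random.Random(seed)
    x = x_last
    xs, probs = [], []
    for d in future_delays:
        # Same transition as the model, applied beyond the observed length
        x = rng.gauss(x + increment + b_delay * d, latent_std)
        xs.append(x)
        probs.append(sigmoid(x))  # P(y_t = 1) under Bernoulli(logits=x)
    return xs, probs


# Usage: continue for 10 steps with no covariate effect.
future_xs, future_probs = continue_sequence(
    x_last=1.2, future_delays=[0.0] * 10, increment=0.1, b_delay=0.4, latent_std=0.2)
```

Repeating this over many posterior draws of `x_last` and the globals gives a predictive band for the remainder rather than a single path.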

- Inference on new sequences

For new data, I want to predict the next time points. I imagine this one is a bit harder. From other threads I understand that I would need amortization, but is there another way? For example, could I train on my training data, fix the global parameters once training has converged, and then fit the model on the new data to find their latent positions? I could then use the solution to problem 2 to make predictions.
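With the global parameters fixed, the latent positions of a single new sequence can also be inferred without refitting any guide. The sketch below swaps in a different technique, a bootstrap particle filter (sequential Monte Carlo), rather than the SVI refit described above; it is plain Python, and `log_sigmoid` and `particle_filter` are hypothetical names.

```python
import math
import random


def log_sigmoid(v):
    """Numerically stable log(sigmoid(v))."""
    return -(max(-v, 0.0) + math.log1p(math.exp(-abs(v))))


def particle_filter(obs, delays, init, increment, b_delay, latent_std,
                    n_particles=500, seed=0):
    """Bootstrap particle filter for one sequence, global parameters held fixed.

    Returns equally weighted particles approximating the distribution of the
    latent state at the last observed step, given all observations so far.
    """
    rng = random.Random(seed)
    particles = [init] * n_particles
    for y, d in zip(obs, delays):
        # Propagate every particle through the Gaussian transition
        particles = [rng.gauss(x + increment + b_delay * d, latent_std)
                     for x in particles]
        # Weight by the Bernoulli(logits=x) likelihood; log(1 - sigmoid(x)) = log_sigmoid(-x)
        log_w = [log_sigmoid(x) if y == 1 else log_sigmoid(-x) for x in particles]
        m = max(log_w)
        weights = [math.exp(lw - m) for lw in log_w]
        # Multinomial resampling back to equal weights
        particles = rng.choices(particles, weights=weights, k=n_particles)
    return particles


# Usage: ten observed successes with zero covariates.
particles = particle_filter([1] * 10, [0.0] * 10, init=0.0, increment=1.0,
                            b_delay=0.5, latent_std=0.5)
print(sum(particles) / len(particles))
```

The returned particles approximate the filtered distribution of the latent state at the last observed step; rolling each one forward through the transition with future `delays` gives predictions for the next time points.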

Thanks!