Hello Pyro Forum,

I’m developing a Bayesian model in numpyro for analyzing multi-stage decision processes.

Here’s a brief overview of the model:

- Model Structure: The model has two stages, each with its own set of parameters for thresholds and deltas (discriminant parameters). The thresholds for each stage are sampled from a transformed uniform distribution constrained between 0 and 1.

- Issues: The second threshold often converges to 1 during MCMC sampling. When this occurs, the MCMC summary shows high rhat values and very tight (SD of 0.01 or less) posteriors around the wrong parameters.

To debug, I rewrote the sampling statement for thresholds to:

thresholds = numpyro.sample('thresholds', dist.TransformedDistribution(dist.Uniform(0, 0.8).expand([num_stages]), dist.transforms.OrderedTransform()))

In theory, I thought this would constrain thresholds[0] and thresholds[1] to both be no greater than 0.8. However, in practice thresholds[1] is being fit to be greater than 0.8 sometimes. I’ve reproduced the error (fixing the seeds) in a jupyter notebook here. The code includes methods to both generate data and to fit the bayesian model.

I have two questions:

- Is there a reason why the second

threshold is being fit to a point outside the support of the prior? Is it an issue with the MCMC backend, the way I wrote the model, or something else?

- Separately, is there a way to convert the Bayesian model into a generative model, i.e. rather than generating data from a separate chunk of code, is there a way I can directly sample from the Bayesian model I wrote by specifying the latent parameters? I think this may help debugging.

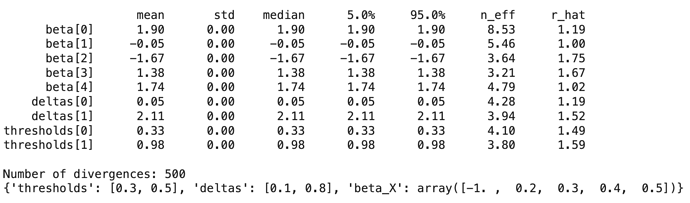

I’ve attached a screenshot of an example convergence issue. The true parameters are at the bottom of the screenshot, and the fitted parameters are in the MCMC summary.

Please let me know if I can clarify anything. Thank you!

You can use Predictive to sample the model with some latent values. The order-transformed domain of [0,0.8] is not [0,0.8]. I guess you need to transform an order vector to [0,0.8] using biject_to(interval(0,0.8)). Something like

TransformedDistribution(Normal(0,1).expand(...), [OrderedTransform(), biject_to(interval(0,0.8))]`

I see, thanks. A couple follow-ups:

-

I see that Predictive takes posterior_samples as an argument, is there a way to sample without conditioning on posterior samples? I.e. I just want to specify all the latent parameters exactly, how can I do that?

-

Is there a reason you set the (pre-transformation) prior to Normal(0,1) instead of Uniform(0, 0.8)? Ideally I’d like the prior to be Unif(0,0.8) but with an ordering constraint. E.g. in stan one could basically do something like

positive_ordered thresholds[num_stages];

and later have thresholds ~ Unif(0, 0.8) (or any other distribution) and the distribution would be constrained to be on the interval specified. But you seem to suggest that sampling from N(0,1) then “bijecting to” (0, 0.8) is preferable. Why?

- Additionally, although the parameters now seem to be contained within the defined interval (0,0.8), they still are converging with 0 SD to the wrong parameters. Do you have any advice on what may be happening and how to debug? I’ve tried pinning other latent parameters to their true values, checking for numerical stability issues, and making sure the parameters are sampled within the bounds I think they should be in.

I think you can do Predictive(model, posterior_samples=your_values, batch_ndim=0).

I guess in Stan code, thresholds does not follow Uniform distribution because positive_ordered is enforced. In NumPyro, TransformedDistribution is a probabilistic distribution transformed from a base distribution. Here if you specify the base distribution is Uniform(0, 0.8), after the transform, for example: x->2x, it will become Uniform(0, 1.6). Does it make sense?

To achieve what Stan code does, I guess you want to do (not sure)

# add .mask(False) to ignore the log probability

positive_ordered = numpyro.sample(..., TransformedDistribution(...).mask(False))

# use Uniform as the log probability

numpyro.factor("positive_ordered_factor", dist.Uniform(0, 0.8).log_prob(positive_ordered))

they still are converging with 0 SD to the wrong parameters

I’m not sure. Probably the above code helps - I think it does that Stan does.

One other question: how can I track the log likelihood given various parameters?

E.g. I’d like to explicitly compare the log likelihood of the data given parameter set 1 vs parameter set 2. Is there an easy way to do so?