Hi everyone!

I’m trying to build a simple Bayesian regression model in Pyro. However, when I start SVI, the AutoDiagonalNormal guide immediately throws an error (see the message below), and the custom guide I wrote sometimes crashes and sometimes works. The error is caused by guide parameters becoming NaN, which I assume is due to exploding gradients. I’m still fairly new to Pyro and Bayesian statistics in general, so it’s probably just some really stupid mistake on my side.

I was also wondering: in this setup, I don’t learn the posterior for z but directly learn the parameters for w instead. Is this a problem? Although the ELBO loss decreases, it usually stays very high (around e^10), even after thousands of SVI steps. I’m really confused right now and can’t get it to work.

Thank you for reading and thank you even more for replying!

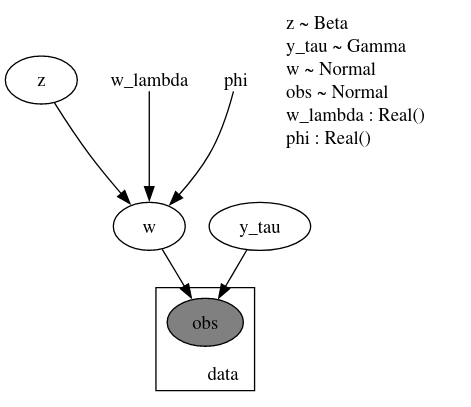

Here is the model in question:

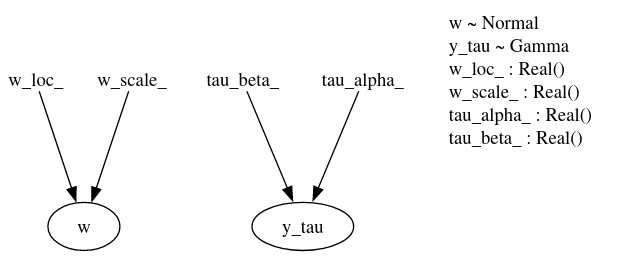

And here is the guide:

Error message from the AutoDiagonalNormal guide:

```
ValueError: Expected parameter loc (Parameter of shape (1001,)) of distribution Normal(loc: torch.Size([1001]), scale: torch.Size([1001])) to satisfy the constraint Real(), but found invalid values:
Parameter containing:
tensor([-0.5683, -1.5561, -0.1919, …, 0.0349, -0.0553, 0.1487],
       requires_grad=True)
Trace Shapes:
Param Sites:
AutoDiagonalNormal.loc 1001
AutoDiagonalNormal.scale 1001
Sample Sites:
```