Hi All,

I am new to Pyro and am trying to learn it.

I have a dataset with a known structure. I want to model my dataset in Pyro and then do inference on my model.

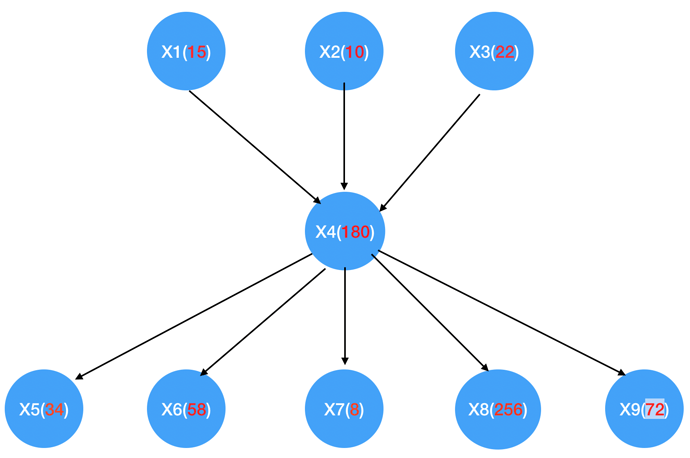

Here is the structure of my model:

All variables are discrete, and the red number on each node indicates the total number of states for that node.

Question 1) How can I model such a dataset in Pyro? One way is to use conditional probability tables (CPTs). Because the nodes have many states, the tables will be huge, so learning and inference will be very slow. Is there a better approach, and if so, what is it?
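To make the size concern concrete, here is a rough count of the entries in a full conditional probability table (CPT). The cardinalities are made up for illustration and are not the ones in my figure, and `cpt_size` and `cpt_free_params` are hypothetical helper names of my own:

```python
from math import prod


def cpt_size(num_states, parent_states):
    """Number of entries in a full table P(node | parents)."""
    # One row per joint parent configuration, one column per node state.
    return num_states * prod(parent_states)


def cpt_free_params(num_states, parent_states):
    """Free parameters: each row sums to 1, so it has num_states - 1 of them."""
    return (num_states - 1) * prod(parent_states)


# Hypothetical node with 50 states and two parents with 20 and 30 states.
print(cpt_size(50, [20, 30]))
print(cpt_free_params(50, [20, 30]))
```

The table grows with the product of the parent cardinalities, which is why I expect the full-table approach to scale badly.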

Question 2) I want to learn the parameters of the model from my dataset using Pyro. What should I do? Should I just define one Dirichlet `pyro.sample` site for each node and then learn the parameters of those distributions?

Question 3) Once training is done and I have the learned parameters, how can I fix the values of several variables and infer the distributions over the remaining nodes? MCMC would be very slow. Is there a faster alternative?
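To clarify what I mean by fixing variables, here is the quantity I am after on a hypothetical two-node network A -> B, computed by hand with Bayes' rule. The probabilities are made up, and `posterior_a_given_b` is just an illustrative name; on my real graph I would need something that does this without enumerating every joint state:

```python
# Made-up "learned" parameters for a toy network A -> B.
p_a = [0.5, 0.3, 0.2]            # P(A)
p_b_given_a = [                  # P(B | A), one row per state of A
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.3, 0.3, 0.4],
]


def posterior_a_given_b(b):
    """P(A | B = b), obtained by normalizing P(A) * P(B = b | A) over A."""
    joint = [p_a[a] * p_b_given_a[a][b] for a in range(len(p_a))]
    total = sum(joint)
    return [j / total for j in joint]


# Fix B to state 1 and look at the resulting distribution over A.
post = posterior_a_given_b(1)
print(post)
```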

Thanks in advance