I am trying to work through the examples in Probabilistic Models of Cognition (PMC) using Pyro, but I'm having trouble finding an equivalent to WebPPL's `Infer` operator for the output of stochastic functions. The first use of `Infer` in PMC is:

```js
//a complex function, that specifies a complex sampling process:
var foo = function(){gaussian(0,1)*gaussian(0,1)}

//make the marginal distributions on return values explicit:
var d = Infer({method: 'forward', samples: 1000}, foo)

//now we can use d as we would any other distribution:
print( sample(d) )
viz(d)
```

Here, Infer converts foo to a distribution that can be used like any built-in distribution. In Pyro, the following code appears to work, but I’m not sure that it is equivalent:

```python
import matplotlib.pyplot as plt
import pyro
import pyro.distributions
import pyro.infer

# a complex function that specifies a complex sampling process:
def foo():
    return pyro.sample("a", pyro.distributions.Normal(0, 1)) * pyro.sample("b", pyro.distributions.Normal(0, 1))

# make the marginal distributions on return values explicit:
posterior = pyro.infer.Importance(foo, num_samples=1000)
marginal = pyro.infer.EmpiricalMarginal(posterior.run())

plt.hist(marginal.sample([1000]), bins=25)
```
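To pin down what "equivalent" should mean here, the following plain-Python sketch imitates what `Infer({method: 'forward', samples: 1000}, foo)` does conceptually: run the thunk many times and turn the tally of its return values into an object that can be sampled from like any other distribution. This is not Pyro's API; `EmpiricalDist` and `infer_forward` are hypothetical names used only for illustration, and `random.gauss` stands in for `gaussian`.

```python
import random
from collections import Counter


class EmpiricalDist:
    """Hypothetical stand-in for the object a forward Infer call returns."""

    def __init__(self, values):
        # Tally how often each distinct return value occurred.
        self.counts = Counter(values)
        self.total = sum(self.counts.values())

    def prob(self, value):
        return self.counts[value] / self.total

    def sample(self, rng=random):
        # Draw a value with probability proportional to its frequency.
        values = list(self.counts)
        weights = [self.counts[v] for v in values]
        return rng.choices(values, weights=weights, k=1)[0]

    def mean(self):
        return sum(v * c for v, c in self.counts.items()) / self.total

    def variance(self):
        m = self.mean()
        return sum(c * (v - m) ** 2 for v, c in self.counts.items()) / self.total


def infer_forward(thunk, samples=1000):
    """Run a stochastic thunk `samples` times and tabulate its return values."""
    return EmpiricalDist(thunk() for _ in range(samples))


def foo():
    # Product of two independent standard normal draws, as in the PMC example.
    return random.gauss(0, 1) * random.gauss(0, 1)


random.seed(0)
d = infer_forward(foo, samples=20000)
print(d.sample())  # use d like any other distribution
print(round(d.mean(), 2), round(d.variance(), 2))  # roughly 0 and 1
```

Since the two factors are independent standard normals, the product has mean 0 and variance 1, so the empirical distribution should land close to those values; a Pyro equivalent would need to produce the same marginal over `foo`'s return value.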

A second, similar example in PMC is:

```js
var geometric = function (p) {
  flip(p) ? 0 : 1 + geometric(p);
};

var g = Infer({method: 'forward', samples: 1000},
              function(){return geometric(0.6)})

viz(g)
```
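For comparison, the distribution this WebPPL `Infer` call estimates can be checked with a plain-Python forward sampler (a sketch, not Pyro code; `flip`, `forward_marginal`, and `exact_pmf` are hypothetical helpers). Returning 0 with probability `p` and otherwise recursing means the return value `k` has probability (1 - p)^k * p.

```python
import random
from collections import Counter


def flip(p, rng):
    """True with probability p, mirroring WebPPL's flip."""
    return rng.random() < p


def geometric(p, rng):
    """Number of failed flips before the first success, as in the PMC example."""
    return 0 if flip(p, rng) else 1 + geometric(p, rng)


def forward_marginal(thunk, samples):
    """Relative frequency of each return value over `samples` forward runs."""
    counts = Counter(thunk() for _ in range(samples))
    return {k: c / samples for k, c in sorted(counts.items())}


def exact_pmf(k, p):
    # P(return = k) = (1 - p)^k * p
    return (1 - p) ** k * p


rng = random.Random(1)
g = forward_marginal(lambda: geometric(0.6, rng), samples=20000)
for k in range(4):
    print(k, round(g.get(k, 0.0), 3), round(exact_pmf(k, 0.6), 3))
```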

Following the Pyro code above, I tried to recreate this using:

def geometric(p, t=None):

if t is None:

t = 0

x = pyro.sample("x_{}".format(t), pyro.distributions.Bernoulli(p))

return 0 if x else 1 + geometric(p, t + 1)

# make the marginal distributions on return values explicit:

g = pyro.infer.Importance(geometric, num_samples=1000)

marginal = pyro.infer.EmpiricalMarginal(g.run(0.6))

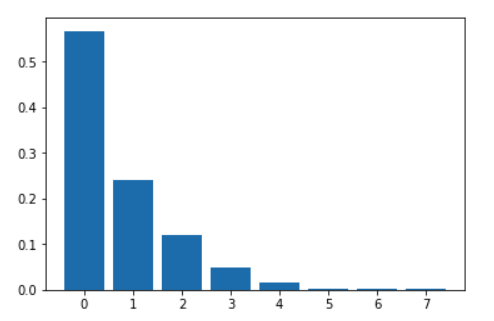

plt.hist(marginal.sample([1000]), bins=25)

However, the call `pyro.infer.EmpiricalMarginal(g.run(0.6))` raises an error in `abstract_infer.py`: `AttributeError: 'int' object has no attribute 'dtype'`.

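Changing that line to

```python
marginal = pyro.infer.EmpiricalMarginal(g.run(0.6), sites="x_0")
```

eliminates the error, but of course it does not replicate the WebPPL example: it gives the marginal distribution of the first flip rather than of the function's return value.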
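The gap between the two marginals can be made concrete with a plain-Python stand-in for a trace (a sketch only; the `trace` dict and `run_many` are hypothetical and are not Pyro's trace objects). Each run records its flips under the same `"x_0"`, `"x_1"`, ... names the Pyro version uses. The site `"x_0"` is just a Bernoulli(0.6) flip, while the return value equals the number of sites in the run minus one, so it varies from run to run and no single site can stand in for it.

```python
import random
from collections import Counter


def geometric(p, rng, trace, t=0):
    # Record each flip under "x_0", "x_1", ... like the Pyro version's site names.
    x = 1 if rng.random() < p else 0
    trace["x_{}".format(t)] = x
    return 0 if x else 1 + geometric(p, rng, trace, t + 1)


def run_many(p, samples, seed=0):
    """Forward-sample the model, keeping each run's trace and return value."""
    rng = random.Random(seed)
    traces, returns = [], []
    for _ in range(samples):
        trace = {}
        returns.append(geometric(p, rng, trace))
        traces.append(trace)
    return traces, returns


traces, returns = run_many(0.6, samples=20000)
n = len(traces)
x0 = Counter(tr["x_0"] for tr in traces)
ret = Counter(returns)
print("P(x_0 = 1)    ~", round(x0[1] / n, 3))   # first flip only: Bernoulli(0.6)
print("P(return = 2) ~", round(ret[2] / n, 3))  # geometric: (0.4 ** 2) * 0.6
print("largest trace:", max(len(tr) for tr in traces), "sites")
```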

Clearly, I’m having trouble reconciling the conceptual differences between Pyro and WebPPL. I’m also having trouble determining exactly what the Pyro classes and functions expect and return. Reading the source helps a bit, but there are many terms that I don’t see defined and that I assume are defined in various papers. I’ve also found some older examples and blog posts that were helpful, but many of those don’t run in Pyro 0.3.

Aside from the specific issue above (can any stochastic function be turned into a first-class Pyro distribution using inference?), are there any documents that explain the general concepts and nomenclature? By comparison, Church and WebPPL seem very clear.