Hello

As an exercise, I would like to implement a Generative Probabilistic Graphics Program (GPGP) to read simple captcha images, as described here:

“Approximate Bayesian Image Interpretation using Generative Probabilistic Graphics Programs”

Vikash K. Mansinghka, Tejas D. Kulkarni, Yura N. Perov, Joshua B. Tenenbaum

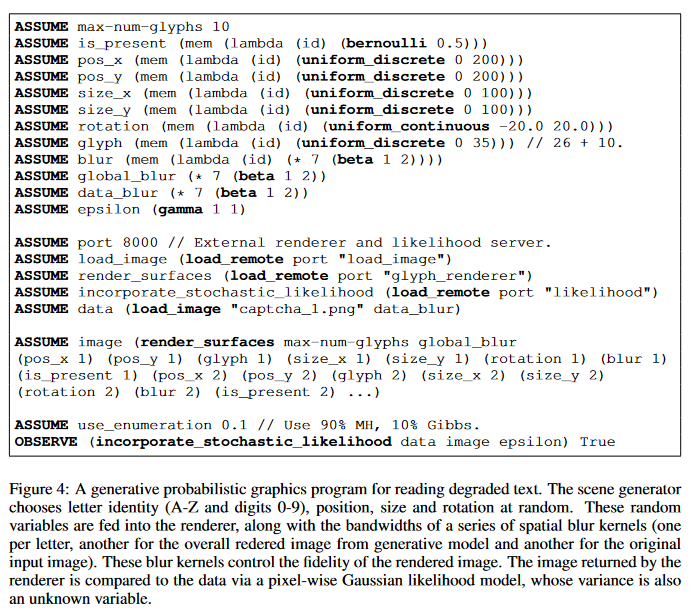

The paper includes some pseudocode in Church.

Based on this, I would like to implement it in NumPyro.

My plan so far is to start with NumPyro’s VAE tutorial:

If I understand correctly, the key difference between a VAE and the model proposed in the paper is that the latter already has a top-down model of how a given captcha is constructed. In particular, this is expressed in the latent variables (e.g. dedicated variables for glyph identity and size), whereas in a VAE the latent space is not necessarily tied to such “real-world” variables but is learned implicitly. Based on this idea, I thought I could replace the VAE’s learned decoder (and its unstructured latent prior) with a handcrafted generative model that has explicit latent variables for glyph identities, sizes, rotations, etc.

Python’s Pillow library could serve as a simple render pipeline.
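A minimal Pillow sketch of such a renderer, assuming Pillow’s built-in default font, a fixed canvas size and one glyph per evenly spaced slot; the function `render_captcha` and its parameters are hypothetical. It maps latent values (characters, sizes, rotations, horizontal offsets) to a grayscale array with values in [0, 1].

```python
import numpy as np
from PIL import Image, ImageDraw, ImageFont


def render_captcha(chars, sizes, angles, dxs, width=120, height=40):
    """Render one glyph per character onto a black canvas; returns floats in [0, 1].

    chars, sizes (pixels), angles (degrees) and dxs (pixels) are hypothetical
    latent values, one entry per glyph.
    """
    canvas = Image.new("L", (width, height), 0)
    font = ImageFont.load_default()  # Pillow's built-in font, not real captcha fonts
    spacing = width // max(len(chars), 1)
    for i, (ch, size, angle, dx) in enumerate(zip(chars, sizes, angles, dxs)):
        # Draw the character in white on its own small black tile.
        tile = Image.new("L", (16, 16), 0)
        ImageDraw.Draw(tile).text((2, 2), ch, fill=255, font=font)
        # Scale the tile to the requested size, then rotate it (expanding the tile).
        side = max(int(round(size)), 1)
        tile = tile.resize((side, side), Image.BILINEAR).rotate(angle, expand=True)
        # Paint white through the tile used as a mask, so overlapping glyphs blend.
        x = int(round(i * spacing + dx))
        y = (height - tile.height) // 2
        canvas.paste(255, (x, y), mask=tile)
    return np.asarray(canvas, dtype=np.float32) / 255.0


image = render_captcha("AB3X", sizes=[20, 18, 22, 20], angles=[0, 10, -15, 5], dxs=[0, 1, -1, 2])
print(image.shape)
```

Since Pillow works on concrete Python/NumPy values, this renderer is neither differentiable nor traceable by `jax.jit`, so gradient-based inference (reparameterized SVI, NUTS) cannot look inside it; methods that only need to evaluate the likelihood, such as Metropolis-Hastings, can still use it.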

A likelihood model could then assess how probable an observed captcha image is, given a particular setting of the latent variables. Given priors for the latent variables and the likelihood, we could then use variational inference to approximate the posterior, and thus infer the most likely glyph identities of the observed captcha.
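To make the prior × likelihood → posterior step concrete, here is a minimal sketch in plain NumPy. It assumes a single glyph, three hypothetical toy templates standing in for rendered glyphs, and independent Gaussian noise on every pixel (one possible pixel-wise likelihood, not necessarily the one used in the paper). With only one discrete latent variable the posterior can be computed exactly by enumeration, which is a useful sanity check before moving to variational inference.

```python
import numpy as np


def gaussian_pixel_loglik(observed, rendered, sigma=0.1):
    """log p(observed | rendered): independent Gaussian noise on every pixel."""
    resid = (observed - rendered) / sigma
    return -0.5 * np.sum(resid ** 2) - observed.size * np.log(sigma * np.sqrt(2.0 * np.pi))


def posterior_over_identity(observed, templates, log_prior, sigma=0.1):
    """Exact posterior over one discrete latent by enumerating every value (Bayes' rule)."""
    log_joint = np.array([lp + gaussian_pixel_loglik(observed, t, sigma)
                          for t, lp in zip(templates, log_prior)])
    log_joint -= log_joint.max()  # subtract the max before exp for numerical stability
    post = np.exp(log_joint)
    return post / post.sum()      # normalising divides out the evidence p(observed)


# Toy 5x5 "glyphs": a vertical bar, a horizontal bar and a diagonal.
templates = [np.zeros((5, 5)) for _ in range(3)]
templates[0][:, 2] = 1.0
templates[1][2, :] = 1.0
np.fill_diagonal(templates[2], 1.0)

rng = np.random.default_rng(0)
observed = templates[0] + rng.normal(0.0, 0.1, size=(5, 5))  # noisy vertical bar
posterior = posterior_over_identity(observed, templates, np.log(np.full(3, 1 / 3)))
print(posterior.round(3))
```

For a full captcha the number of joint configurations grows exponentially with the number of glyphs, and continuous latents such as size and rotation cannot be enumerated at all, which is where approximate inference (variational or MCMC) comes in.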

As I am rather new to probabilistic programming and NumPyro, I would really appreciate some help here.

My current questions are:

- Does my plan make sense so far?
- What would be a good likelihood model for comparing the rendered image with the input image? The paper mentions that it uses a pixel-wise comparison model.
- Might Pyro, rather than NumPyro, be a better framework for this?
- Is NumPyro’s VAE tutorial a good starting point for my endeavor?
- Are there additional tutorials that may help me here?