Hi, sorry for the late response. It looks like I need to clear up a few doubts about Bayesian neural networks first. I am doing binary classification based on this implementation. Since the task is binary, I don't need one-hot encoding, and the last-layer activation has to be a sigmoid, so I changed that part of the code.

This is the error I am getting:

Traceback (most recent call last):

File "/home/pranav/.local/lib/python3.6/site-packages/pyro/poutine/trace_struct.py", line 216, in compute_log_prob

log_p = site["fn"].log_prob(site["value"], *site["args"], **site["kwargs"])

File "/home/pranav/.local/lib/python3.6/site-packages/torch/distributions/one_hot_categorical.py", line 90, in log_prob

self._validate_sample(value)

File "/home/pranav/.local/lib/python3.6/site-packages/torch/distributions/distribution.py", line 277, in _validate_sample

raise ValueError('The value argument must be within the support')

ValueError: The value argument must be within the support

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "pranav_baysian.py", line 186, in

bayesnn.infer_parameters(train_loader, num_epochs=10, lr=0.002)

File "pranav_baysian.py", line 166, in infer_parameters

loss = svi.step(images, labels, kl_factor=kl_factor)

File "/home/pranav/.local/lib/python3.6/site-packages/pyro/infer/svi.py", line 99, in step

loss = self.loss_and_grads(self.model, self.guide, *args, **kwargs)

File "/home/pranav/.local/lib/python3.6/site-packages/pyro/infer/trace_elbo.py", line 125, in loss_and_grads

for model_trace, guide_trace in self._get_traces(model, guide, *args, **kwargs):

File "/home/pranav/.local/lib/python3.6/site-packages/pyro/infer/elbo.py", line 168, in _get_traces

yield self._get_trace(model, guide, *args, **kwargs)

File "/home/pranav/.local/lib/python3.6/site-packages/pyro/infer/trace_mean_field_elbo.py", line 67, in _get_trace

model, guide, *args, **kwargs)

File "/home/pranav/.local/lib/python3.6/site-packages/pyro/infer/trace_elbo.py", line 52, in _get_trace

"flat", self.max_plate_nesting, model, guide, *args, **kwargs)

File "/home/pranav/.local/lib/python3.6/site-packages/pyro/infer/enum.py", line 51, in get_importance_trace

model_trace.compute_log_prob()

File "/home/pranav/.local/lib/python3.6/site-packages/pyro/poutine/trace_struct.py", line 223, in compute_log_prob

traceback)

File "/home/pranav/.local/lib/python3.6/site-packages/six.py", line 702, in reraise

raise value.with_traceback(tb)

File "/home/pranav/.local/lib/python3.6/site-packages/pyro/poutine/trace_struct.py", line 216, in compute_log_prob

log_p = site["fn"].log_prob(site["value"], *site["args"], **site["kwargs"])

File "/home/pranav/.local/lib/python3.6/site-packages/torch/distributions/one_hot_categorical.py", line 90, in log_prob

self._validate_sample(value)

File "/home/pranav/.local/lib/python3.6/site-packages/torch/distributions/distribution.py", line 277, in _validate_sample

raise ValueError('The value argument must be within the support')

ValueError: Error while computing log_prob at site 'label':

The value argument must be within the support

Trace Shapes:

Param Sites:

Sample Sites:

h1 dist |

value 1 129 |

log_prob |

h2 dist |

value 1 129 |

log_prob |

h3 dist |

value 1 129 |

log_prob |

logits dist |

value 1 1 |

log_prob |

label dist 1 | 1

value | 1

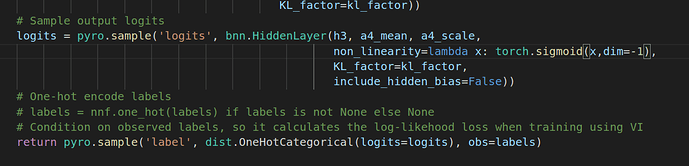

I don't understand what to use in place of `dist.OneHotCategorical` in this line: `pyro.sample('label', dist.OneHotCategorical(logits=logits), obs=labels)`
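To make the question concrete, here is a minimal sketch of what I think is going on, outside of Pyro and using only `torch.distributions`. The tensors are hypothetical stand-ins for one batch element (a single logit and a 0/1 label, matching the `1 1` shape in the trace above); they are not taken from my model. My assumption is that `OneHotCategorical` with a single category only accepts the one-hot vector `[1.]`, so a label of 0 falls outside its support, while `Bernoulli` accepts 0/1 labels directly.

```python
import torch
from torch.distributions import Bernoulli, OneHotCategorical

# Hypothetical stand-ins for one batch element: a single logit and a 0/1 label.
logits = torch.zeros(1, 1)
label = torch.tensor([[0.0]])


def one_hot_log_prob_fails(logits, label):
    """Return True if OneHotCategorical rejects the label as outside its support."""
    try:
        OneHotCategorical(logits=logits, validate_args=True).log_prob(label)
    except ValueError:
        return True
    return False


# With one category, the only valid one-hot vector is [1.], so label 0 is rejected.
print(one_hot_log_prob_fails(logits, label))

# Bernoulli takes the same single logit and accepts 0/1 float labels.
log_p = Bernoulli(logits=logits, validate_args=True).log_prob(label)
print(log_p)

# Assumed Pyro equivalent of the sample statement (a guess, not checked on my model):
# pyro.sample("label", dist.Bernoulli(logits=logits).to_event(1), obs=labels)
```

If this reading is right, the network should output the raw logit and the sigmoid is applied implicitly by `Bernoulli(logits=...)`, so a separate sigmoid layer may not be needed.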

Also, which loss function should I use, now that the output is a single float and the label is 0 or 1?
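My tentative understanding, which may be wrong, is that with SVI the loss stays the ELBO and the likelihood term does the job that binary cross-entropy does in a non-Bayesian network. The sketch below uses only the standard library to check that the log-probability of a 0/1 label under a Bernoulli with a given logit is the negative of the binary cross-entropy for that logit. The function names and the example logits are made up for illustration.

```python
import math


def sigmoid(z):
    """Map a real-valued logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def bernoulli_log_prob(logit, label):
    """Log-probability of a 0/1 label under Bernoulli(sigmoid(logit))."""
    p = sigmoid(logit)
    return label * math.log(p) + (1 - label) * math.log(1 - p)


def binary_cross_entropy(logit, label):
    """Binary cross-entropy on a logit, in the numerically stable form."""
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))


# Compare the two quantities on a few made-up (logit, label) pairs.
for logit, label in [(2.0, 1), (2.0, 0), (-0.5, 1), (0.0, 0)]:
    lp = bernoulli_log_prob(logit, label)
    bce = binary_cross_entropy(logit, label)
    print(f"logit={logit:+.1f} label={label} log_prob={lp:.6f} bce={bce:.6f}")
```

In every row `log_prob` should equal `-bce`, which is why I suspect no separate loss function is needed on top of the ELBO once the likelihood is a Bernoulli.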