Sorry, there was a bug in my code; the problems above are fixed.

The current problem is:

File "/home/ufo/anaconda3/envs/dl/lib/python3.7/site-packages/pyro/contrib/forecast/forecaster.py", line 130, in predict

noise_dist = reshape_batch(noise_dist, noise_dist.batch_shape + (1,))

File "/home/ufo/anaconda3/envs/dl/lib/python3.7/functools.py", line 840, in wrapper

return dispatch(args[0].__class__)(*args, **kw)

File "/home/ufo/anaconda3/envs/dl/lib/python3.7/site-packages/pyro/contrib/forecast/util.py", line 278, in _

base_dist = reshape_batch(d.base_dist, base_shape)

File "/home/ufo/anaconda3/envs/dl/lib/python3.7/functools.py", line 840, in wrapper

return dispatch(args[0].__class__)(*args, **kw)

File "/home/ufo/anaconda3/envs/dl/lib/python3.7/site-packages/pyro/contrib/forecast/util.py", line 272, in reshape_batch

raise NotImplementedError("reshape_batch() does not suport {}".format(type(d)))

NotImplementedError: reshape_batch() does not suport <class 'pyro.distributions.torch_distribution.MaskedDistribution'>

Looking at the reshape_batch function:

@singledispatch

def reshape_batch(d, batch_shape):

"""

EXPERIMENTAL Given a distribution ``d``, reshape to different batch shape

of same number of elements.

This is typically used to move the the rightmost batch dimension "time" to

an event dimension, while preserving the positions of other batch

dimensions.

:param d: A distribution.

:type d: ~pyro.distributions.Distribution

:param tuple batch_shape: A new batch shape.

:returns: A distribution with the same type but given batch shape.

:rtype: ~pyro.distributions.Distribution

"""

raise NotImplementedError("reshape_batch() does not suport {}".format(type(d)))

I tried to register an implementation for MaskedDistribution as below:

@reshape_batch.register(dist.MaskedDistribution)

def _(d, batch_shape):

base_dist = reshape_batch(d.base_dist, batch_shape)

return dist.MaskedDistribution(base_dist, d._mask.reshape(base_dist.shape()))
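For reference, here is a minimal sketch of how this kind of registration dispatches, using only the standard library. `Base`, `Wrapped` and `reshape` are hypothetical toy stand-ins (not Pyro classes or functions); the point is only that a handler registered for a wrapper type can delegate to the handler of the object it wraps.

```python
from functools import singledispatch


class Base:
    """Hypothetical stand-in for a plain distribution with a batch shape."""

    def __init__(self, shape):
        self.shape = tuple(shape)


class Wrapped:
    """Hypothetical stand-in for a wrapper type that holds a base object."""

    def __init__(self, base, mask_shape):
        self.base = base
        self.mask_shape = tuple(mask_shape)


@singledispatch
def reshape(d, shape):
    # Fallback used for every type without a registered handler.
    raise NotImplementedError("reshape() does not support {}".format(type(d)))


@reshape.register(Base)
def _(d, shape):
    return Base(shape)


@reshape.register(Wrapped)
def _(d, shape):
    # Delegate to the handler of the wrapped object, then re-wrap the result.
    base = reshape(d.base, shape)
    return Wrapped(base, shape)


result = reshape(Wrapped(Base((2, 3)), (2, 3)), (6, 1))
```

The dispatch is on the class of the first argument only, so the registration has to be executed before the first call that needs it.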

But I got this error when running:

File "/home/ufo/anaconda3/envs/dl/lib/python3.7/site-packages/pyro/contrib/forecast/forecaster.py", line 289, in __init__

elbo._guess_max_plate_nesting(model, guide, (data, covariates), {})

File "/home/ufo/anaconda3/envs/dl/lib/python3.7/site-packages/pyro/infer/elbo.py", line 109, in _guess_max_plate_nesting

model_trace.compute_log_prob()

File "/home/ufo/anaconda3/envs/dl/lib/python3.7/site-packages/pyro/poutine/trace_struct.py", line 221, in compute_log_prob

.format(name, exc_value, shapes)).with_traceback(traceback) from e

File "/home/ufo/anaconda3/envs/dl/lib/python3.7/site-packages/pyro/poutine/trace_struct.py", line 216, in compute_log_prob

log_p = site["fn"].log_prob(site["value"], *site["args"], **site["kwargs"])

File "/home/ufo/anaconda3/envs/dl/lib/python3.7/site-packages/torch/distributions/independent.py", line 88, in log_prob

log_prob = self.base_dist.log_prob(value)

File "/home/ufo/anaconda3/envs/dl/lib/python3.7/site-packages/pyro/distributions/torch_distribution.py", line 303, in log_prob

return scale_and_mask(self.base_dist.log_prob(value), mask=self._mask)

File "/home/ufo/anaconda3/envs/dl/lib/python3.7/site-packages/torch/distributions/normal.py", line 72, in log_prob

self._validate_sample(value)

File "/home/ufo/anaconda3/envs/dl/lib/python3.7/site-packages/torch/distributions/distribution.py", line 253, in _validate_sample

raise ValueError('The value argument must be within the support')

ValueError: Error while computing log_prob at site 'residual':

The value argument must be within the support

It is because of the NaN values in the input data.

Did you mention NaN because of this? So I need NaN-free input together with a mask, is that correct?

Then I filled the NaN values with zero, and training was successful.

But how do I do this with Forecaster? It doesn't accept a mask parameter.
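A minimal sketch of the fill-and-mask step on a tiny made-up tensor (the values and the names `raw`, `observed`, `filled` are illustrative assumptions, not my real data). The mask is taken from the raw data before the NaN values are replaced. As far as I understand, Pyro's `.mask()` keeps the log-probability where the mask is True, so the sketch builds a mask that is True for observed entries.

```python
import torch

# Made-up series with one missing value; time is dimension -2.
raw = torch.tensor([[1.0], [float("nan")], [3.0]])

# True where a real observation exists, False where the value is missing.
observed = ~torch.isnan(raw)

# Replace NaN with a placeholder so that log_prob sees values inside the support.
filled = torch.nan_to_num(raw, nan=0.0)
```

The placeholder value itself should not matter for training as long as the masked entries are excluded from the likelihood.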

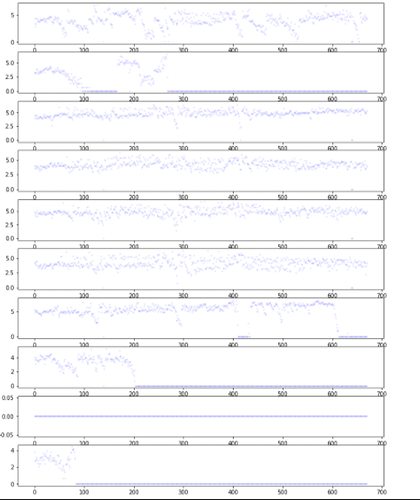

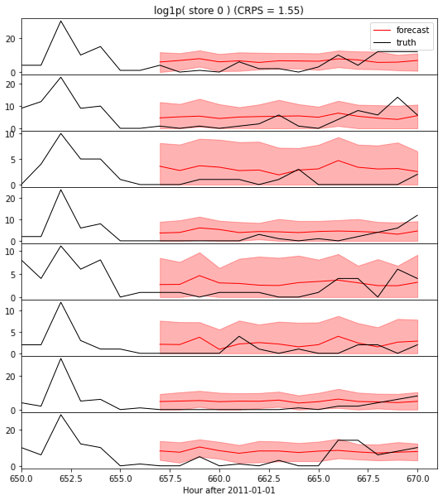

pyro.set_rng_seed(1)

pyro.clear_param_store()

mask = torch.isnan(torch.Tensor(msc))  # computed from the raw data, before the NaN values are replaced

# test_data = torch.Tensor(msc)

test_data = torch.Tensor(np.nan_to_num(msc))

covariates = torch.zeros(test_data.size(-2), 0)

forecaster = Forecaster(Model2(mask=mask[..., T0:T1, :]), test_data[..., T0:T1, :], covariates[T0:T1],

learning_rate=0.1, learning_rate_decay=1, num_steps=501, log_every=50)

samples = forecaster(test_data[..., T0:T1, :], covariates[T1:T2], num_samples=100)

samples

# here samples is empty: tensor([], size=(44, 103, 0, 1))

Actually, the Forecaster class seems strange: the first argument of the __call__ method, data, seems to have no effect on the prediction. In the tutorials the data length is not equal to the covariates length, yet it produces correct results.
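As far as I can tell from the Pyro documentation, Forecaster.__call__ forecasts the time steps in [t1, t2), where t1 = data.size(-2) and t2 = covariates.size(-2). If that reading is right, the number of forecasted steps is just the difference of the two lengths, which would explain the empty result above. A small sketch of that arithmetic (the helper `forecast_horizon` and the values of T0, T1, T2 are hypothetical, chosen only for illustration):

```python
def forecast_horizon(data_len: int, covariates_len: int) -> int:
    """Number of forecasted steps, assuming the forecast covers [data_len, covariates_len)."""
    if covariates_len < data_len:
        raise ValueError("covariates must be at least as long as data")
    return covariates_len - data_len


# Hypothetical split points, for illustration only.
T0, T1, T2 = 0, 100, 200

# Passing covariates[T1:T2] gives T2 - T1 rows, the same length as data[T0:T1] here.
steps_with_future_only = forecast_horizon(T1 - T0, T2 - T1)

# Passing covariates[T0:T2] gives rows for the observed and the future window.
steps_with_full_range = forecast_horizon(T1 - T0, T2 - T0)
```

Under this assumption the covariates passed to the call need to span both the observed window and the forecast window, which matches the tutorials using a covariates tensor longer than the data.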