Hello,

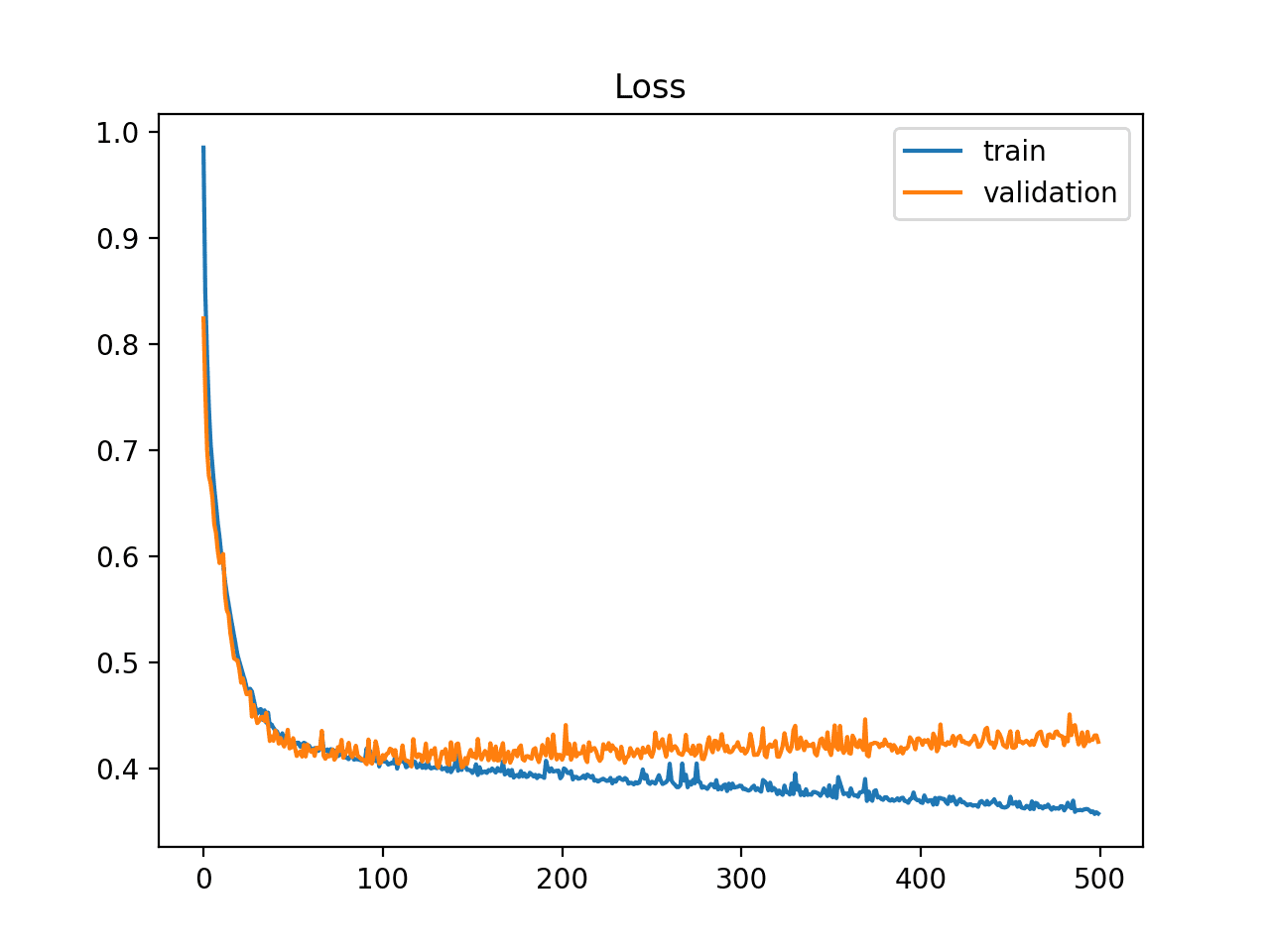

My understanding is that training a model using SVI is very similar to training a neural network. One of the useful outputs when training a neural net is the train vs. validation learning curves. This means evaluating the model at each epoch to get the training loss and validation loss (using in and out-of-sample data, respectively), and get a plot similar to the one below:

I would like to get the same two curves for my SVI training. The training loss is straightforward, since it is just `svi_results.losses`.

For the validation loss, though, I'm struggling a bit. The `svi.run()` method only accepts the training data, so I'm guessing the only way to get this loss is to write a custom training loop that evaluates the validation loss every epoch, probably using the `Predictive` class. What is the most efficient way of doing this? Is there an existing implementation that I missed?

I didn't find any resources on this particular problem, so I'd also appreciate it if anyone could point me to relevant material.

Thank you!