Hi everyone, I am a beginner at probabilistic programming.

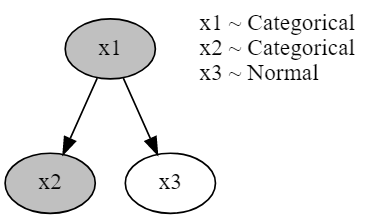

I am currently trying to perform probabilistic inference on a toy Bayesian network with two observed Categorical variables, X1 and X2, and one latent Normal variable, X3. For example, I would like to compute P(X1=1 | X2=2).

The Bayesian network structure is shown in the figure above, and the code is as follows:

    import torch
    import pyro
    import pyro.distributions as dist
    from pyro.infer import TraceEnum_ELBO, config_enumerate

    @config_enumerate
    def model(x1_obs=None, x2_obs=None):
        # Prior over X1 (3 categories)
        x1_probs = torch.tensor([0.7, 0.2, 0.1])
        # P(X2 | X1): one row per value of X1, 4 categories for X2
        x2_probs = torch.tensor([[0.5, 0.2, 0.2, 0.1],
                                 [0.6, 0.2, 0.1, 0.1],
                                 [0.7, 0.1, 0.1, 0.1]])
        # Mean and standard deviation of X3 for each value of X1
        x3_mu = torch.tensor([0., 1., 2.])
        x3_sigma = torch.tensor([1., 1., 1.])

        x1 = pyro.sample('x1', dist.Categorical(probs=x1_probs), obs=x1_obs)
        x2 = pyro.sample('x2', dist.Categorical(probs=x2_probs[x1.long()]), obs=x2_obs)
        x3 = pyro.sample('x3', dist.Normal(x3_mu[x1.long()], x3_sigma[x1.long()]), infer={'enumerate':'parallel', 'expand':True, 'num_samples':100})

    def guide(**kwargs):
        pass

    conditional_marginals = TraceEnum_ELBO().compute_marginals(model, guide, x2_obs=torch.tensor(2.))
    prob = conditional_marginals['x1'].log_prob(torch.tensor(1.)).exp()
    print(prob)

I expected this to work by simply applying Bayes' rule: P(X1=1 | X2=2) = P(X2=2 | X1=1) P(X1=1) / P(X2=2).
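As a sanity check, here is the value I would expect, computed by hand from the same probability tables. This is only a minimal sketch in plain Python (no Pyro), and the variable names `joint`, `evidence`, and `posterior` are my own, not part of any library:

```python
# Conditional probability tables copied from the model in this post.
x1_probs = [0.7, 0.2, 0.1]            # P(X1 = k)
x2_probs = [
    [0.5, 0.2, 0.2, 0.1],             # P(X2 | X1 = 0)
    [0.6, 0.2, 0.1, 0.1],             # P(X2 | X1 = 1)
    [0.7, 0.1, 0.1, 0.1],             # P(X2 | X1 = 2)
]

x2_observed = 2

# Joint P(X1 = k, X2 = 2) for every k; X3 is unobserved and is ignored here.
joint = [p_x1 * row[x2_observed] for p_x1, row in zip(x1_probs, x2_probs)]

# Evidence P(X2 = 2), obtained by summing the joint over X1.
evidence = sum(joint)

# Posterior P(X1 = k | X2 = 2) by Bayes' rule.
posterior = [j / evidence for j in joint]

print(posterior[1])  # P(X1 = 1 | X2 = 2) = 0.02 / 0.17, roughly 0.1176
```

So I would hope to see a value close to 0.1176 from `compute_marginals`.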

Instead, I get the following error:

    ---------------------------------------------------------------------------
    TypeError                                 Traceback (most recent call last)
    /tmp/ipykernel_845721/1298689924.py in
         17     pass
         18
    ---> 19 conditional_marginals = TraceEnum_ELBO().compute_marginals(model, guide, x2_obs=torch.tensor(2.))
         20 prob = conditional_marginals['x1'].log_prob(torch.tensor(1.)).exp()
         21 print(prob)

    /anaconda3/lib/python3.9/site-packages/pyro/infer/traceenum_elbo.py in compute_marginals(self, model, guide, *args, **kwargs)
        491                 "compatible with guide enumeration."
        492             )
    --> 493         return _compute_marginals(model_trace, guide_trace)
        494
        495     def sample_posterior(self, model, guide, *args, **kwargs):

    /anaconda3/lib/python3.9/site-packages/pyro/infer/traceenum_elbo.py in _compute_marginals(model_trace, guide_trace)
        250             while logits.shape[0] == 1:
        251                 logits = logits.squeeze(0)
    --> 252             marginal_dists[name] = _make_dist(site["fn"], logits)
        253     return marginal_dists
        254

    /anaconda3/lib/python3.9/site-packages/pyro/infer/traceenum_elbo.py in _make_dist(dist_, logits)
        219     if isinstance(dist_, dist.Bernoulli):
        220         logits = logits[..., 1] - logits[..., 0]
    --> 221     return type(dist_)(logits=logits)
        222
        223

    /anaconda3/lib/python3.9/site-packages/pyro/distributions/distribution.py in __call__(cls, *args, **kwargs)
         22         if result is not None:
         23             return result
    ---> 24         return super().__call__(*args, **kwargs)
         25
         26

    TypeError: __init__() got an unexpected keyword argument 'logits'

Is this caused by TraceEnum_ELBO().compute_marginals improperly marginalizing the continuous variable X3?
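For context, this is why I assumed X3 should not affect the result. Assuming the graph is X1 → X2 and X1 → X3, as in the code, and X3 is never observed, its density integrates to 1 for every value of X1 and should drop out of the posterior:

```latex
\begin{align*}
P(X_1 = 1 \mid X_2 = 2)
&= \frac{\int P(X_1 = 1)\, P(X_2 = 2 \mid X_1 = 1)\, p(x_3 \mid X_1 = 1)\, dx_3}
        {\sum_{k} \int P(X_1 = k)\, P(X_2 = 2 \mid X_1 = k)\, p(x_3 \mid X_1 = k)\, dx_3} \\
&= \frac{P(X_1 = 1)\, P(X_2 = 2 \mid X_1 = 1)}
        {\sum_{k} P(X_1 = k)\, P(X_2 = 2 \mid X_1 = k)}
\end{align*}
```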

Any help would be greatly appreciated! Thanks!