Hi All!

I am learning Pyro and trying to implement tutorial Edward example in Pyro

My code is below. Could someone help me to define model and guide correctly? I am not getting an error, but the estimation is incorrect, and I am sure my guide/model function is wrong. Thank you!!!

# build a dataset

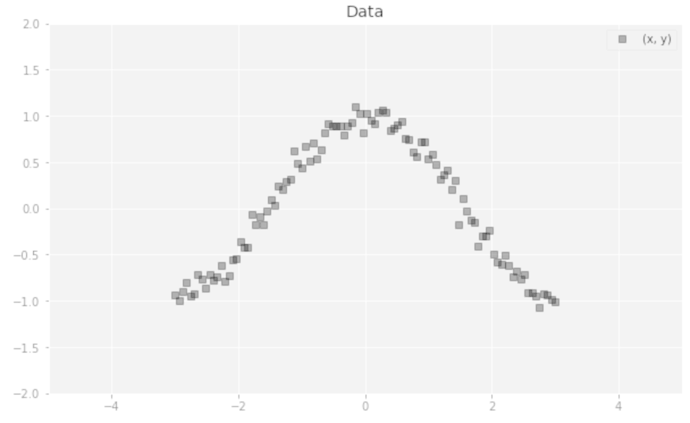

def build_toy_dataset(N=50, noise_std=0.1):

x = np.linspace(-3, 3, num=N)

y = np.cos(x) + np.random.normal(0, noise_std, size=N)

x = x.astype(np.float32).reshape((N, 1))

y = y.astype(np.float32)

return x, y

def neural_network(x, W_0, W_1, b_0, b_1):

h = torch.tanh(torch.matmul(x, W_0) + b_0)

h = torch.matmul(h, W_1) + b_1

return torch.reshape(h, [-1])

pyro.set_rng_seed(42)

N = 100 # number of data points

D = 1 # number of features

x_train, y_train = build_toy_dataset(N)

x_train_tensor = torch.tensor(x_train, dtype=torch.float)

y_train_tensor = torch.tensor(y_train, dtype=torch.float)

params0 = {

'w0_loc': torch.zeros([D, 2]),

'w0_scale' : torch.ones([D, 2]),

'w1_loc' : torch.zeros([2, 1]),

'w1_scale' : torch.ones([2, 1]),

'b0_loc': torch.zeros(2),

'b0_scale': torch.ones(2),

'b1_loc': torch.tensor(1.),

'b1_scale': torch.tensor(1.)

}

def model(data_x, data_y, params):

w0 = pyro.sample("w0", Normal(loc = params["w0_loc"], scale = params["w0_scale"] ))

w1 = pyro.sample("w1", Normal(loc = params["w1_loc"], scale = params["w1_scale"] ))

b0 = pyro.sample("b0", Normal(loc = params["b0_loc"], scale = params["b0_scale"] ))

b1 = pyro.sample("b1", Normal(loc = params["b1_loc"], scale = params["b1_scale"] ))

for i in range(len(data_x)):

y = pyro.sample("obs_{}".format(i),\

Normal(loc = neural_network(data_x[i], w0, w1, b0, b1), scale = 0.1 * torch.ones(1)),\

obs = data_y[i])

def guide(data_x, data_y, params):

qw0_loc = pyro.param("qw0_loc", params['w0_loc'])

qw0_scale = pyro.param("qw0_scale", params['w0_scale'], constraint=constraints.positive)

qw1_loc = pyro.param("qw1_loc", params['w1_loc'])

qw1_scale = pyro.param("qw1_scale", params['w1_scale'], constraint=constraints.positive)

qb0_loc = pyro.param("qb0_loc", params['b0_loc'])

qb0_scale = pyro.param("qb0_scale", params['b0_scale'], constraint=constraints.positive)

qb1_loc = pyro.param("qb1_loc", params['b1_loc'])

qb1_scale = pyro.param("qb1_scale", params['b1_scale'], constraint=constraints.positive)

softplus = torch.nn.Softplus()

w0 = pyro.sample("w0", Normal(loc = qw0_loc, scale = softplus(qw0_scale)) )

w1 = pyro.sample("w1", Normal(loc = qw1_loc, scale = softplus(qw1_scale)) )

b0 = pyro.sample("b0", Normal(loc = qb0_loc, scale = softplus(qb0_scale)) )

b1 = pyro.sample("b1", Normal(loc = qb1_loc, scale = softplus(qb1_scale)) )

for i in range(len(data_x)):

y = pyro.sample("obs_{}".format(i),\

Normal(loc = neural_network(data_x[i], w0, w1, b0, b1), scale = 0.1 * torch.ones(1))

adam_params = {"lr": 0.0005}

optimizer = Adam(adam_params)

svi = SVI(model, guide, optimizer, loss=Trace_ELBO())

n_steps = 500

losses = []

pyro.set_rng_seed(42)

# SVI

pyro.get_param_store().clear()

for step in range(n_steps):

loss = svi.step(x_train_tensor, y_train_tensor, params0)

losses.append( loss/len(x_train_tensor) )

if step % 100 == 0:

print("[iteration %04d] loss: %.4f" % (step + 1, loss/len(x_train_tensor)))