I’m seeing similar behavior:

- Full usage of all cores when num_chains=1

- Very slow sampling if num_chains > 1

- I see this issue on my Ubuntu machine

- I do not see this issue on my MacBook Pro (even when running in an Ubuntu Docker image)

Ubuntu

System: Ubuntu 18.04, CPU: i7 (6 cores 12 threads)

All data fits into RAM, so this is not an I/O issue.

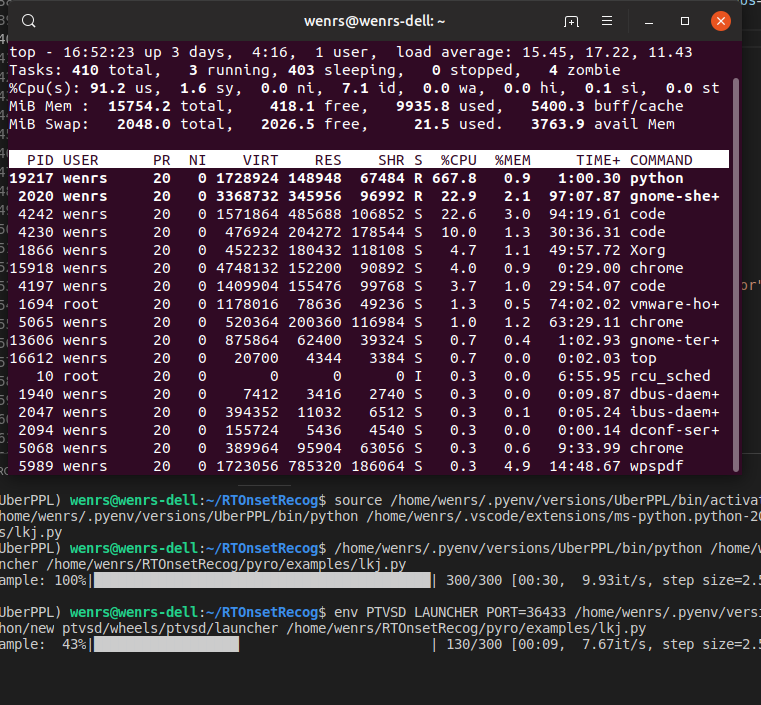

Setting num_chains=1

mcmc = MCMC(kernel, num_samples=10_000, warmup_steps=0, num_chains=1)

I see that almost all 12 threads on my machine are in use at 100%

Under this condition, sampling runs at ~200 it/s:

Sample: 100%|█████████████████████████████████████| 10000/10000 [00:50, 198.61it/s, step size=2.50e-01, acc. prob=0.009]

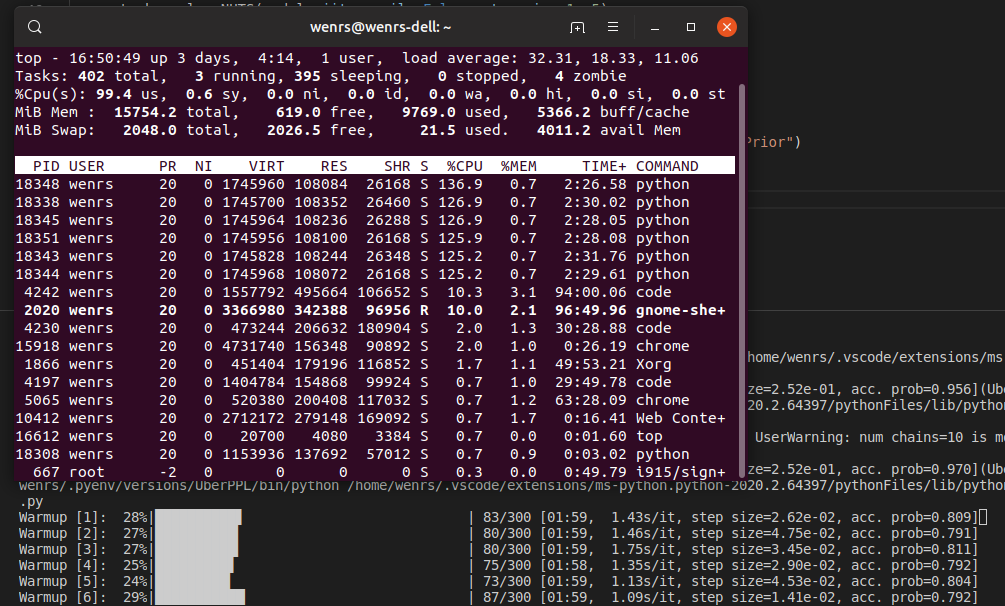

Setting num_chains=2

If I increase num_chains to 2, I still see full usage of all 12 threads, but my sampling speed plummets (note that the rate is now reported in s/it, not it/s):

Warmup [1]: 0%| | 8/10000 [00:48, 7.62s/it, step size=3.12e-02, acc. prob=0.993]

Warmup [2]: 0%| | 3/10000 [00:18, 5.66s/it, step size=1.56e-02, acc. prob=0.999]
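To put the two Ubuntu runs in the same units, here is a small sketch that converts the progress-bar rates quoted above to it/s. The helper name `iterations_per_second` is made up for illustration; the numbers are copied from the logs in this post. Each chain comes out roughly 1,100 to 1,500 times slower than the single-chain run.

```python
def iterations_per_second(value, unit):
    """Convert a progress-bar rate to iterations per second."""
    if unit == "it/s":
        return value
    if unit == "s/it":
        return 1.0 / value
    raise ValueError(f"unknown unit: {unit!r}")


# Rates copied from the Ubuntu logs above.
single_chain = iterations_per_second(198.61, "it/s")
chain_1 = iterations_per_second(7.62, "s/it")
chain_2 = iterations_per_second(5.66, "s/it")

# Per-chain slowdown relative to the single-chain run.
slowdown_1 = single_chain / chain_1
slowdown_2 = single_chain / chain_2

if __name__ == "__main__":
    print(f"chain 1: {chain_1:.3f} it/s, about {slowdown_1:.0f}x slower")
    print(f"chain 2: {chain_2:.3f} it/s, about {slowdown_2:.0f}x slower")
```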

OSX

Oddly, if I run this on my MBP, I see no slowdown when going to more chains.

System: Mac OSX, CPU: i7 (quad-core)

All data fits in RAM

One chain

Sample: 0%|▏ | 33/10000 [00:13, 2.28it/s, step size=3.12e-02, acc. prob=0.896]

Two chains

Warmup [1]: 2%|▋ | 192/10000 [00:55, 1.13it/s, step size=6.25e-02, acc. prob=0.274]

Warmup [2]: 12%|████▎ | 1235/10000 [00:55, 27.41it/s, step size=1.25e-01, acc. prob=0.048]

To make matters stranger, if I start an Ubuntu Docker container on my Mac, the numbers are the same as on OSX. (Running this same Ubuntu image on my Ubuntu machine results in the same performance hit described at the start of the post.)
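One diagnostic that might be worth trying (this is an assumption, not something confirmed by the results above): since a single chain already saturates all 12 threads, two chains may be competing for the same cores. The sketch below splits the available threads evenly across chains by setting `OMP_NUM_THREADS` and `MKL_NUM_THREADS` before `torch` is imported. The helper names `threads_per_chain` and `limit_threads` are hypothetical; calling `torch.set_num_threads(n)` after import is the in-process alternative.

```python
import os


def threads_per_chain(num_chains, total_threads=None):
    """Evenly divide the available threads across chains (at least 1 each)."""
    total = total_threads or os.cpu_count() or 1
    return max(1, total // num_chains)


def limit_threads(num_chains, total_threads=None):
    """Cap OpenMP/MKL threads via environment variables.

    Assumption: this must run before `import torch` (and before any
    worker processes are spawned) for the variables to take effect.
    """
    n = threads_per_chain(num_chains, total_threads)
    for var in ("OMP_NUM_THREADS", "MKL_NUM_THREADS"):
        os.environ[var] = str(n)
    return n


if __name__ == "__main__":
    n = limit_threads(num_chains=2)
    print(f"Each chain limited to {n} thread(s)")
    # import torch, build the kernel, and run MCMC(...) after this point
```

If the two-chain run speeds up with the cap in place, oversubscription is a likely culprit; if not, the cause lies elsewhere.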

The environment is consistent between the two machines:

| Package | Version |
| --- | --- |
| numpy | 1.18.4 |
| pyro-api | 0.1.2 |
| pyro-ppl | 1.3.1 |
| torch | 1.5.0 |
| torchvision | 0.6.0 |
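For anyone who wants to compare their own setup against the versions above, a minimal sketch using only the standard library (`importlib.metadata`, Python 3.8+) follows. The function name `installed_versions` is made up for illustration; the package names are the ones listed in this post.

```python
from importlib import metadata

# Distribution names taken from the package list above.
PACKAGES = ["numpy", "pyro-api", "pyro-ppl", "torch", "torchvision"]


def installed_versions(packages=PACKAGES):
    """Return {package: version string, or None if not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name:<16} {version or 'not installed'}")
```

Running this on both machines and diffing the output is a quick way to confirm the environments really match.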