Hello Pyro community!

Thank you so much for the very nice documentation and examples; they are very helpful!

I apologize in advance if the answer to my question is obvious; I am a bit confused by some of Pyro's features.

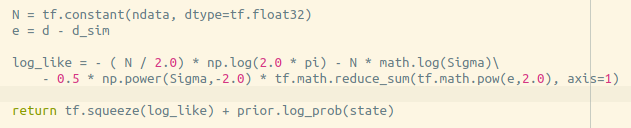

I am trying to use the NeuTra routine of Hoffman et al. (2019) to approximate a posterior distribution p(theta|d). In my TensorFlow implementation I simply calculated the posterior log probability for theta samples drawn from the variational density q(theta) as follows:

but in Pyro I got a bit confused when I went over the “Example: Neural MCMC with NeuTraReparam”.

If I understand it correctly, the Pyro example provides the target as a distribution object (an analytical density), from which you can sample and whose log probability is computed automatically.

In my case I don't know how to represent my posterior as a distribution object to sample from (maybe there is a way?). I can only evaluate the unnormalized log posterior for a specific theta drawn from q(theta), by adding the log likelihood log p(d|theta) to the log prior log p(theta) (equivalently, multiplying the likelihood by the prior).

How can I implement a model for this case?

Thanks in advance!