Dear Pyro Community,

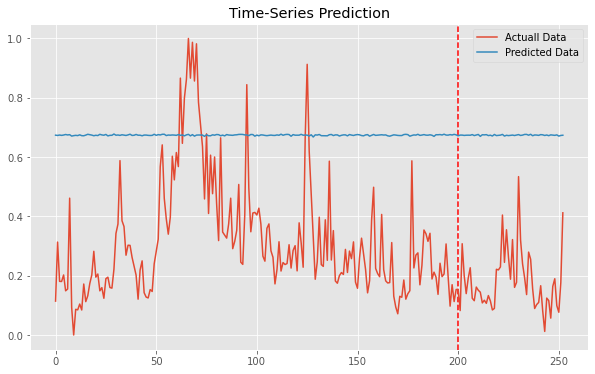

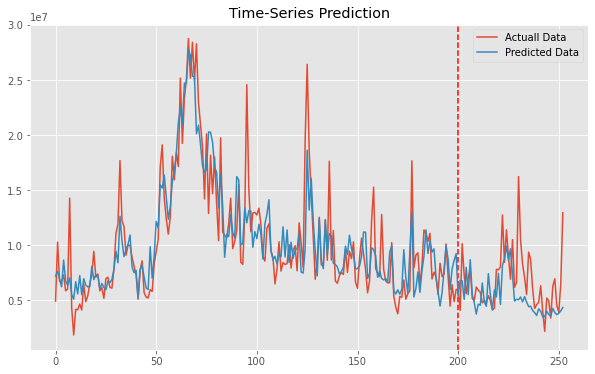

I am trying to build a Bayesian LSTM model in Pyro. At this stage it has only one LSTM layer and two linear layers that connect it to the output. I first built the same example with a plain PyTorch LSTM to make sure the code runs successfully and gives good results. Now that I have changed the code to Pyro for Bayesian estimation, putting priors on the weights of both the LSTM module and the linear modules, I see that after training the model my predictions are almost stationary and do not follow any trend, whereas the traditional LSTM in PyTorch at least captures the trend well. I would appreciate any insight into how I can find the issue and how to improve the model for Bayesian LSTM prediction. This would help other researchers as well: I have searched a lot but have not yet found a previously solved example of a Bayesian LSTM.

Here is my code:

    import torch
    import torch.nn as nn
    import pyro
    import pyro.distributions as dist
    from pyro.nn import PyroModule, PyroSample


    class Model(PyroModule):
        def __init__(self, input_size=1, num_classes=1, hidden_size=3, num_layers=1, prior_scale=50.0):
            super().__init__()
            self.num_classes = num_classes
            self.num_layers = num_layers
            self.input_size = input_size
            self.hidden_size = hidden_size
            self.activation = nn.ReLU()

            # Unidirectional LSTM followed by two linear layers
            self.lstm = PyroModule[nn.LSTM](input_size, hidden_size, num_layers, batch_first=True, bidirectional=False)
            self.linear = PyroModule[nn.Linear](hidden_size, 128)  # Adjusted for unidirectional LSTM
            self.fc = PyroModule[nn.Linear](128, num_classes)

            # Priors on the weights and biases of each layer
            # Input to hidden layer
            self.lstm.weight_ih_l0 = PyroSample(dist.Normal(0., prior_scale).expand([4 * hidden_size, input_size]).to_event(2))
            self.lstm.bias_ih_l0 = PyroSample(dist.Normal(0., prior_scale).expand([4 * hidden_size]).to_event(1))
            # Hidden to hidden layer
            self.lstm.weight_hh_l0 = PyroSample(dist.Normal(0., prior_scale).expand([4 * hidden_size, hidden_size]).to_event(2))
            self.lstm.bias_hh_l0 = PyroSample(dist.Normal(0., prior_scale).expand([4 * hidden_size]).to_event(1))
            # Linear layers
            self.linear.weight = PyroSample(dist.Normal(0., prior_scale).expand([128, hidden_size]).to_event(2))
            self.linear.bias = PyroSample(dist.Normal(0., prior_scale).expand([128]).to_event(1))
            self.fc.weight = PyroSample(dist.Normal(0., prior_scale).expand([num_classes, 128]).to_event(2))
            self.fc.bias = PyroSample(dist.Normal(0., prior_scale).expand([num_classes]).to_event(1))

        def forward(self, x, y=None, noise_shape=0.5):
            h_0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size)  # hidden state
            c_0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size)  # internal state
            output, (hn, cn) = self.lstm(x, (h_0, c_0))  # lstm with input, hidden, and internal state
            hn = hn.view(-1, self.hidden_size)  # reshaping the data for Dense layer next
            out = self.activation(hn)
            out = self.linear(out)
            out = self.activation(out)
            mu = self.fc(out)
            sigma = pyro.sample("sigma", dist.Gamma(noise_shape, 1))  # infer the response noise
            with pyro.plate("data", x.shape[0]):
                obs = pyro.sample("obs", dist.Normal(mu, sigma * sigma), obs=y)
            return mu
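
For context, here is a rough count of how many scalar values NUTS has to sample in this model. It is only a sketch: `count_latent_values` is a helper name made up for illustration, and `input_size=1` is an assumption (the real value comes from `X_test_tensors_final.shape[2]`); `hidden_size=2` matches the run further down.

```python
import math


def count_latent_values(input_size, hidden_size, num_classes=1, linear_width=128):
    """Count the scalar latent values, site by site (hypothetical helper)."""
    shapes = {
        "lstm.weight_ih_l0": (4 * hidden_size, input_size),
        "lstm.bias_ih_l0": (4 * hidden_size,),
        "lstm.weight_hh_l0": (4 * hidden_size, hidden_size),
        "lstm.bias_hh_l0": (4 * hidden_size,),
        "linear.weight": (linear_width, hidden_size),
        "linear.bias": (linear_width,),
        "fc.weight": (num_classes, linear_width),
        "fc.bias": (num_classes,),
        "sigma": (),  # scalar noise site; math.prod(()) == 1
    }
    return {name: math.prod(shape) for name, shape in shapes.items()}


# input_size=1 is an assumption; hidden_size=2 is the value used for the MCMC run.
counts = count_latent_values(input_size=1, hidden_size=2)
total = sum(counts.values())
print(total)  # 554
```

So under these assumptions the sampler explores 554 dimensions, each weight under a `Normal(0, 50)` prior, with 50 warmup steps and 50 samples.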

And these are the steps for MCMC training:

    from pyro.infer import MCMC, NUTS

    # Initialize the Bayesian LSTM model
    input_size = X_test_tensors_final.shape[2]  # Number of features
    hidden_size = 2  # Number of features in hidden state
    num_layers = 1  # Number of stacked LSTM layers
    model = Model(input_size=input_size, num_classes=1, hidden_size=hidden_size, num_layers=num_layers, prior_scale=50.0)

    # Set up the MCMC sampler
    nuts_kernel = NUTS(model)
    mcmc = MCMC(nuts_kernel, num_samples=50, warmup_steps=50)

    # Run the MCMC sampler
    mcmc.run(X_train_tensors_final, y_train_tensors)

Here is the plot I get for some data after training this model:

And this is the result I got with the PyTorch LSTM:

I used open data here to check the models.