Hi all, I am trying to use the VGP (`gp.models.VariationalGP`) with the `MultiClass` likelihood for a classification problem in my project. I first tried it on a toy dataset, but the results are bad. Here is the code:

```python
#!/usr/bin/env python

import matplotlib.pyplot as plt
import numpy as np
import torch
import pyro
import pyro.contrib.gp as gp
from scipy import stats
from sklearn.datasets import make_blobs, make_classification
from sklearn.metrics import accuracy_score

pyro.enable_validation(True)  # can help with debugging
pyro.set_rng_seed(0)

if __name__ == '__main__':
    # Create dataset
    N = 200
    N_TRAIN = int(0.80 * N)
    N_FEATURES = 7
    num_class = 3

    # X, y = make_classification(n_samples=200, n_features=N_FEATURES, n_redundant=0, n_informative=2,
    #                            n_clusters_per_class=2)
    X, y = make_blobs(n_samples=200, n_features=N_FEATURES, centers=num_class)

    plt.scatter(X[:, 0], X[:, 1], marker='o', c=y, s=25, edgecolor='k')
    plt.show()

    # Train/test split (make_blobs shuffles the samples by default)
    y_train_label = y[:N_TRAIN]
    y_test_label = y[N_TRAIN:]
    X_train = torch.tensor(X[:N_TRAIN, :], dtype=torch.float32)
    X_test = torch.tensor(X[N_TRAIN:, :], dtype=torch.float32)
    y_train = torch.tensor(y_train_label, dtype=torch.float32)
    y_test = torch.tensor(y_test_label, dtype=torch.float32)

    # Encode one-hot targets with shape (num_class, num_points), same dtype as X
    y_train_enc = torch.zeros(num_class, N_TRAIN)
    y_test_enc = torch.zeros(num_class, N - N_TRAIN)
    y_train_enc.scatter_(0, torch.as_tensor(y_train_label, dtype=torch.long).view(1, -1), 1)
    y_test_enc.scatter_(0, torch.as_tensor(y_test_label, dtype=torch.long).view(1, -1), 1)

    # Choose kernel and likelihood for multi-class classification.
    # A lengthscale vector of size input_dim gives one lengthscale per feature (ARD);
    # a scalar lengthscale gives the isotropic version.
    kernel = gp.kernels.RBF(input_dim=N_FEATURES, variance=torch.tensor(1.),
                            lengthscale=torch.ones(N_FEATURES))
    likelihood = gp.likelihoods.MultiClass(num_classes=num_class)
    gpc = gp.models.VariationalGP(X_train, y_train_enc, kernel=kernel, likelihood=likelihood,
                                  whiten=True, jitter=1e-03)

    # Fit with SVI (older Pyro releases exposed this as gpc.optimize())
    gp.util.train(gpc, num_steps=1000)

    # Predict by sampling labels from the likelihood 1000 times and taking a majority vote
    with torch.no_grad():
        f_loc, f_var = gpc(X_train, full_cov=False)
        y_train_results = np.array([gpc.likelihood(f_loc, f_var).numpy() for _ in range(1000)])
        f_loc, f_var = gpc(X_test, full_cov=False)
        y_test_results = np.array([gpc.likelihood(f_loc, f_var).numpy() for _ in range(1000)])

    # np.ravel copes with both the (1, N) and (N,) shapes stats.mode returns across SciPy versions
    y_train_results = np.ravel(stats.mode(y_train_results, axis=0)[0])
    y_test_results = np.ravel(stats.mode(y_test_results, axis=0)[0])

    train_acc = accuracy_score(y_train_label, y_train_results)
    test_acc = accuracy_score(y_test_label, y_test_results)
    print("Train accuracy: %.3f, Test accuracy: %.3f" % (train_acc, test_acc))
```

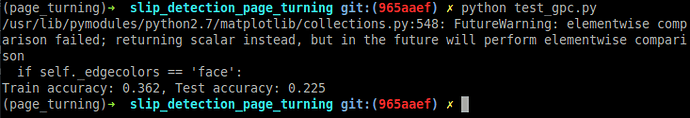

The results are bad:

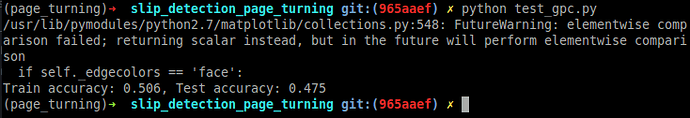

Even with the same setup on a binary problem (setting `num_class = 2`), the results are still bad:

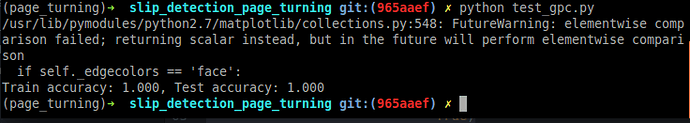

However, when I switched to a simpler binary setup using `gp.likelihoods.Binary()`:

```python
...
num_class = 2

# X, y = make_classification(n_samples=200, n_features=N_FEATURES, n_redundant=0, n_informative=2,
#                            n_clusters_per_class=2)
X, y = make_blobs(n_samples=200, n_features=N_FEATURES, centers=num_class)
...
likelihood = gp.likelihoods.Binary()
# For gp.likelihoods.Binary() there is no need for one-hot vectors: y holds the 0/1 labels
gpc = gp.models.VariationalGP(X_train, y_train, kernel=kernel, likelihood=likelihood,
                              whiten=True, jitter=1e-03)
...
```

The optimization results seem reasonably good:

So the problem does not seem to be the kernel hyperparameter initialization or the SVI optimizer settings, and I wonder whether it is related to the `MultiClass` likelihood. However, I checked the implementation in pyro/pyro/contrib/gp/likelihoods/multi_class.py, and it seems correct according to Section 3.5 of the GPML book.
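One thing I am unsure about is the shape convention. Below is a minimal NumPy sketch (my own re-implementation, not Pyro code; all function names are hypothetical) of the one-sample Monte Carlo estimate of E_q[log p(y | f)] that I understand `Binary` and `MultiClass` to compute. It assumes `MultiClass` wants latent values of shape `(num_classes, N)` and integer labels of shape `(N,)`; if I read the `VariationalGP` docs correctly, `latent_shape[-1]` should then be the number of classes. The last function is a guess at what happens if a one-hot `y` of shape `(num_classes, N)` is read as labels instead.

```python
import numpy as np


def log_softmax(a, axis=-1):
    """Numerically stable log-softmax along `axis`."""
    shifted = a - a.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def sample_f(f_loc, f_var, rng):
    """Draw one sample f ~ Normal(f_loc, f_var), elementwise."""
    return f_loc + np.sqrt(f_var) * rng.standard_normal(f_loc.shape)


def binary_mc_loglik(f_loc, f_var, y, rng):
    """Binary case: f_loc, f_var and y all have shape (N,), with y in {0, 1}."""
    f = sample_f(f_loc, f_var, rng)
    log_p1 = -np.logaddexp(0.0, -f)  # log sigmoid(f)
    log_p0 = -np.logaddexp(0.0, f)   # log(1 - sigmoid(f))
    return np.sum(y * log_p1 + (1 - y) * log_p0)


def multiclass_mc_loglik(f_loc, f_var, y, rng):
    """Multi-class case: f_loc, f_var have shape (num_classes, N); y holds integer labels, shape (N,)."""
    f = sample_f(f_loc, f_var, rng)
    logp = log_softmax(f.T, axis=-1)  # (N, num_classes): class axis moved last
    return np.sum(logp[np.arange(y.shape[0]), y])


def onehot_as_labels_loglik(f_loc, f_var, y_onehot, rng):
    """Hypothetical misreading: every row of a one-hot (num_classes, N) matrix is
    treated as a vector of labels, so its 0/1 entries act as class indices 0 and 1."""
    f = sample_f(f_loc, f_var, rng)
    logp = log_softmax(f.T, axis=-1)
    idx = np.arange(y_onehot.shape[1])
    return sum(np.sum(logp[idx, row.astype(int)]) for row in y_onehot)


# Usage: latent values that strongly favour the true class of each point
rng = np.random.default_rng(0)
labels = np.array([0, 1, 2, 2, 1])
one_hot = np.eye(3)[labels].T  # shape (3, 5)
f_loc = 10.0 * one_hot
f_var = np.full_like(f_loc, 0.01)
print(multiclass_mc_loglik(f_loc, f_var, labels, rng))      # close to 0
print(onehot_as_labels_loglik(f_loc, f_var, one_hot, rng))  # strongly negative
```

If that guess were right, the misread objective for each point would be `(num_classes - 1) * log p(class 0) + log p(class 1)` no matter what the true label is, so the likelihood term would carry no label information at all. I have not verified this against Pyro's internals.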

Has anyone tried a similar problem? Any advice is appreciated.