Hey guys,

This is more of a general Bayesian Statistics question.

Here is a reference to the 8-schools example in NumPyro:

Numpyro Eight Schools

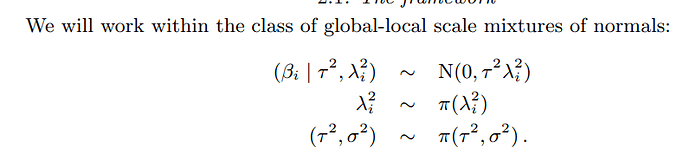

In the Bayesian statistics literature, it seems to have become popular to use priors that look like this:

The idea is that giving each predictor its own lam_i lets the model automatically shrink away the predictors that have no predictive power. In the 8-schools example, we could have a separate lam_i for each school-indicator variable. That seems like it would improve on what the example currently does, where a single lam applies to all the schools.

This idea of Automatic Relevance Determination (ARD) is also used in this example:

https://num.pyro.ai/en/stable/examples/sparse_regression.html

My question is: would using automatic relevance determination improve the 8-schools model?