I'm creating a simple HMM with Gaussian emissions, modified from the example "Example: Hidden Markov Models" in the Pyro Tutorials 1.8.4 documentation.

I have two issues:

1. pyro.sample always returns [0, 1, 2] after the first epoch.
2. The parameter p_mu barely changes.

```python
import torch
import pyro
import pyro.distributions as dist
from pyro import poutine
from pyro.ops.indexing import Vindex


def model(observations, num_state):
    with poutine.mask(mask=True):
        # Per-state emission means, per-state emission scales, and a row-wise
        # Dirichlet prior over the transition matrix.
        p_mu = pyro.sample("p_mu",
                           dist.Normal(0, 10000).expand([num_state]).to_event(1))
        p_tau = pyro.sample("p_tau",
                            dist.Exponential(1).expand([num_state]).to_event(1))
        p_transition = pyro.sample("p_transition",
                                   dist.Dirichlet((1 / num_state) * torch.ones(num_state, num_state)).to_event(1))

    current_state = 0
    counter = 0
    for t in pyro.markov(range(len(observations))):
        counter += 1
        # Hidden state at time t, marked for parallel enumeration.
        current_state = pyro.sample("x_{}".format(t),
                                    dist.Categorical(Vindex(p_transition)[current_state]),
                                    infer={"enumerate": "parallel"})
        print("counter: " + str(counter))
        print(current_state)
        # Gaussian emission; p_tau is used as the standard deviation.
        pyro.sample("y_{}".format(t),
                    dist.Normal(Vindex(p_mu)[..., current_state], Vindex(p_tau)[..., current_state]),
                    obs=observations[t])
```

I use TraceTMC_ELBO, and the printed output looks like this:

```
counter: 198
tensor(0)
counter: 199
tensor(1)
counter: 200
tensor(0)
counter: 1
tensor([[0],
        [1],
        [2]])
counter: 2
tensor([[[0]],

        [[1]],

        [[2]]])
counter: 3
tensor([[0],
        [1],
        [2]])
counter: 4
tensor([[[0]],

        [[1]],

        [[2]]])
```

fritzo

August 24, 2022, 1:13pm


The first issue is due to enumeration. In fact, the [[0],[1],[2]]-shaped values are returned at every iteration; what you're seeing first is a trial run of the guide to determine shapes. For an explanation, see the Tensor shapes tutorial.

So is this behavior correct for my model? I expected it to sample only one number at a time.

fritzo

August 30, 2022, 6:09pm


Yes, these tensor shapes are expected and proper. Each of Pyro's inference algorithms evaluates your model under a different nonstandard interpretation. Trace_ELBO is straightforward and simply evaluates with differentiable PyTorch tensors; TraceEnum_ELBO evaluates with specially shaped tensors that enumerate each discrete site over all of its possible values, then later marginalizes those values out; TraceTMC_ELBO evaluates with batches of samples at each site. These nonstandard evaluations are what make inference possible.

Thanks for replying. I switched the ELBO for my HMM to Trace_ELBO, and something very weird happened.

Here’s my current model.

```python
import torch
import pyro
import pyro.distributions as dist
from pyro import poutine
from pyro.distributions import constraints
from pyro.ops.indexing import Vindex


def model(observations, num_state):
    assert not torch._C._get_tracing_state()

    with poutine.mask(mask=True):
        # Row-wise Dirichlet prior over the transition matrix.
        p_transition = pyro.sample("p_transition",
                                   dist.Dirichlet((1 / num_state) * torch.ones(num_state, num_state)).to_event(1))
        # Emission means and scales are now learnable params, not latent samples.
        p_mu = pyro.param(name="p_mu",
                          init_tensor=torch.randn(num_state),
                          constraint=constraints.real)
        p_tau = pyro.param(name="p_tau",
                           init_tensor=torch.ones(num_state),
                           constraint=constraints.positive)

    current_state = 0
    # Every sample site inside this plate gets a batch dimension of size len(observations).
    with pyro.plate("obs_plate", len(observations)):
        for t in pyro.markov(range(len(observations))):
            current_state = pyro.sample("x_{}".format(t),
                                        dist.Categorical(Vindex(p_transition)[current_state, :]),
                                        infer={"enumerate": "parallel"})
            pyro.sample("y_{}".format(t),
                        dist.Normal(Vindex(p_mu)[current_state], Vindex(p_tau)[current_state]),
                        obs=observations[t])
```

I fit it as follows:

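```python
import torch
from pyro import poutine
from pyro.infer import SVI, Trace_ELBO
from pyro.infer.autoguide import AutoDelta
from pyro.optim import Adam

device = torch.device("cuda:0")
obs = torch.tensor(obs)  # obs: the raw observation sequence, loaded earlier
obs = obs.to(device)
torch.set_default_tensor_type("torch.cuda.FloatTensor")

# Point-estimate (AutoDelta) guide over the sites whose names start with "p_".
guide = AutoDelta(poutine.block(model, expose_fn=lambda msg: msg["name"].startswith("p_")))

Elbo = Trace_ELBO
elbo = Elbo(max_plate_nesting=1)
optim = Adam({"lr": 0.001})
svi = SVI(model, guide, optim, elbo)

while True:
    loss = svi.step(observations=obs, num_state=3)
```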

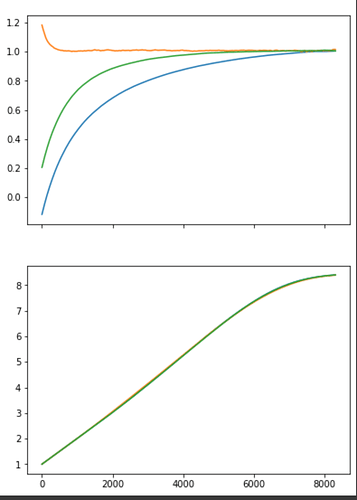

The true value of my parameter mu are [-10, 1, 10]

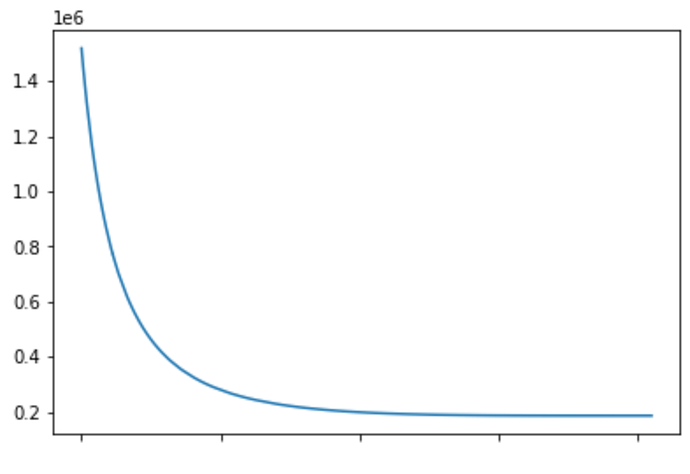

But as the model trains, the ELBO is

mu and sigma looks like:

Do you have any thoughts about how I could fix the problem?
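To reproduce the setup without the original data, here is a minimal sketch of a simulator for a 3-state Gaussian-emission HMM whose means match the stated true values [-10, 1, 10]. The helper name simulate_gaussian_hmm, the unit emission scales, the sticky transition matrix and the length of 200 steps are all assumptions for illustration, not the data behind the plots. Like the model, the chain starts from state 0 and draws x_0 from row 0 of the transition matrix.

```python
import torch


def simulate_gaussian_hmm(num_steps, mu, sigma, transition, seed=0):
    """Hypothetical helper: draw a state path and observations from a Gaussian HMM.

    mu, sigma: per-state emission mean and standard deviation.
    transition[i, j]: probability of moving from state i to state j.
    """
    gen = torch.Generator().manual_seed(seed)
    states = torch.empty(num_steps, dtype=torch.long)
    prev = 0  # start from state 0, as the model does
    for t in range(num_steps):
        prev = torch.multinomial(transition[prev], 1, generator=gen).item()
        states[t] = prev
    noise = torch.randn(num_steps, generator=gen)
    observations = mu[states] + sigma[states] * noise
    return states, observations


mu_true = torch.tensor([-10.0, 1.0, 10.0])
sigma_true = torch.ones(3)  # assumption: unit emission scales
transition_true = torch.tensor([[0.90, 0.05, 0.05],
                                [0.05, 0.90, 0.05],
                                [0.05, 0.05, 0.90]])  # assumption: sticky chain
states, observations = simulate_gaussian_hmm(200, mu_true, sigma_true, transition_true)
```

Because the true states are known here, fitted values of p_mu can be checked directly against mu_true.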