Hi, I’m running a Bayesian neural network on a GPU and I see no speedup compared to CPU execution (~160 s/iteration on both). I would expect it to be either faster (hopefully) or slower, but not the same…

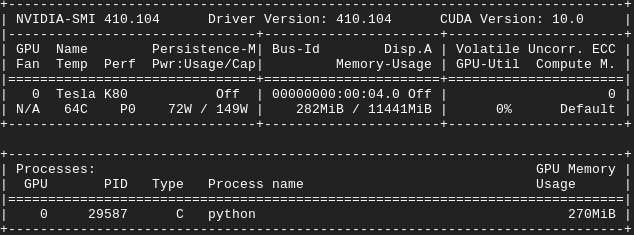

Am I missing something in my code, or is there anything else I need to set up? (I do see some memory being allocated in nvidia-smi.)

My training data has ~4 million samples and I’m using batches of 512. I’m also using an autoguide; the relevant code is below.
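
A quick back-of-the-envelope check of what these numbers imply per step. This assumes that one "iteration" means one full pass over the ~4 million samples (one epoch), which the post does not state explicitly; `step_stats` is a hypothetical helper for illustration only.

```python
# Back-of-the-envelope: svi.step calls per epoch and time per call.
# ASSUMPTION: "~160s/iteration" is the time for one full pass over the data.
import math

n_samples = 4_000_000      # "~4 million samples"
seconds_per_epoch = 160.0  # "~160s/iteration" (assumed to mean per epoch)

def step_stats(batch_size: int) -> tuple[int, float]:
    """Return (steps per epoch, milliseconds per step) for a given batch size."""
    steps = math.ceil(n_samples / batch_size)         # last batch may be partial
    ms_per_step = 1000.0 * seconds_per_epoch / steps  # average wall time per step
    return steps, ms_per_step

steps, ms = step_stats(512)
print(f"{steps} steps per epoch, ~{ms:.1f} ms per step")
```

If the assumption holds, each `svi.step` takes roughly 20 ms over about 7,800 small steps per epoch. In that regime, fixed per-step costs (Python and Pyro bookkeeping, kernel launches, host-to-device copies) can outweigh the arithmetic the GPU accelerates, so trying a much larger batch size is a cheap way to test that hypothesis.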

Thanks for your help!

    import pyro
    from pyro.distributions import LogNormal
    from pyro.infer.autoguide import AutoDiagonalNormal

    class NNModel():
        def __init__(self):
            ...
            self.cuda()

    def model(x_data, y_data):
        # priors and lifted module
        with pyro.plate('data', ...):
            prediction_mean = lifted_module_sample(x_data).squeeze(-1)
            pyro.sample('observations', LogNormal(prediction_mean, scale), obs=y_data)

    guide = AutoDiagonalNormal(...)

    def train(...):
        batch_size = 512
        for _ in range(n_iterations):
            for x, y in train_dataloader:
                # .cuda() returns a copy on the GPU; it does not move x in place
                x = x.cuda()
                y = y.cuda()
                svi.step(x, y)
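
To see whether the GPU is actually doing the work, it can help to time individual steps rather than whole epochs. Below is a minimal sketch of a per-step timer; `time_steps` and its `sync` parameter are hypothetical names, not part of Pyro. Because CUDA kernels are launched asynchronously, passing `torch.cuda.synchronize` as `sync` when timing on the GPU makes each measurement include the queued GPU work instead of just the launch.

```python
# Sketch of a per-step wall-clock timer.
# `sync` is a hypothetical hook: pass torch.cuda.synchronize when timing GPU
# work (CUDA calls are asynchronous), or leave it as None for CPU runs.
import time
from statistics import mean
from typing import Callable, Optional

def time_steps(step: Callable[[], object],
               n_steps: int = 50,
               warmup: int = 5,
               sync: Optional[Callable[[], None]] = None) -> list[float]:
    """Call `step` repeatedly; return the duration of each timed call in seconds."""
    for _ in range(warmup):  # untimed warm-up calls (CUDA init, caches, ...)
        step()
    if sync is not None:
        sync()               # drain warm-up work before timing starts
    durations = []
    for _ in range(n_steps):
        start = time.perf_counter()
        step()
        if sync is not None:
            sync()           # wait for queued GPU work before reading the clock
        durations.append(time.perf_counter() - start)
    return durations

if __name__ == "__main__":
    # Stand-in workload; in the training loop this would be something like
    # lambda: svi.step(x, y) with x and y already moved to the GPU.
    ds = time_steps(lambda: sum(i * i for i in range(10_000)), n_steps=20)
    print(f"mean {1000 * mean(ds):.2f} ms over {len(ds)} steps")
```

Comparing the mean step time for the same batch on CPU and GPU, and at a few batch sizes, would indicate whether fixed per-step overhead or actual computation dominates.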