Is it possible to estimate the values `to_be_need` and `to_remember` from the following model?

def generator(x):

# values to estimate

to_be_need = 3

to_remember = 4

preds = torch.zeros(len(x)).long()

for d in range(len(x)):

# priors

leads = dist.Uniform(100, 200).sample()

# likelihood

needs = dist.Poisson(to_be_need*torch.ones(int(leads))).sample()

memos = dist.Poisson(to_remember*torch.ones(int(leads))).sample()

deals = needs[needs >= memos]

deals = deals[deals < len(x) - d].unique(return_counts=True)

preds[deals[0].long() + d] += deals[1]

return preds

x = torch.arange(0, 100, 1)

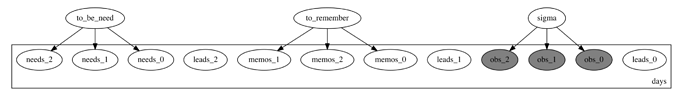

y = generator(x)I tried to build naive model, but render shows some problems with dependencies:

```python
import torch
import pyro
import pyro.distributions as dist
from pyro.ops.indexing import Vindex


def model(x, y):
    to_be_need = pyro.sample("to_be_need", dist.Uniform(1, 7))
    to_remember = pyro.sample("to_remember", dist.Uniform(1, 7))
    sigma = pyro.sample("sigma", dist.Uniform(0, 1))

    preds = torch.zeros(len(x)).long()
    for d in pyro.plate("days", len(x)):
        leads = pyro.sample(f"leads_{d}", dist.Uniform(100, 200))
        needs = pyro.sample(f"needs_{d}", dist.Poisson(to_be_need * torch.ones(int(leads))))
        memos = pyro.sample(f"memos_{d}", dist.Poisson(to_remember * torch.ones(int(leads))))
        deals = Vindex(needs)[needs >= memos]
        deals = deals[deals < len(y) - d].unique(return_counts=True)
        preds[deals[0].long() + d] += deals[1]
        pyro.sample(f"obs_{d}", dist.Normal(preds[d], sigma), obs=y[d])


pyro.render_model(model, model_args=(x, y))
```

And inference causes an error:

```python
from pyro.infer import MCMC, NUTS

mcmc = MCMC(NUTS(model), num_samples=1000, warmup_steps=200)
mcmc.run(x, y)
```

```
ValueError: Continuous inference cannot handle discrete sample site 'needs_0'. Consider enumerating that variable as documented in https://pyro.ai/examples/enumeration.html . If you are already enumerating, take care to hide this site when constructing an autoguide, e.g. guide = AutoNormal(poutine.block(model, hide=['needs_0'])).
```

The error message hints at enumeration, but I have no idea how to apply it here.