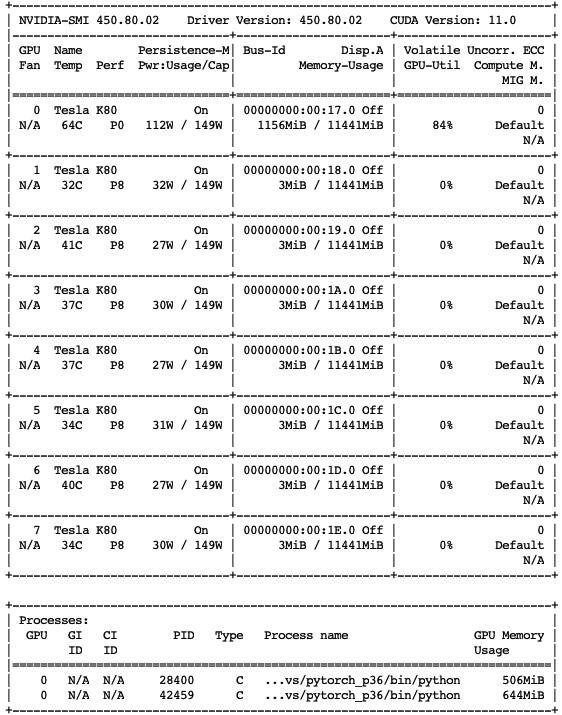

I searched this forum and finally got my model running on the GPU, but I just realized it only runs on 1 of my 8 GPUs. Is there a guide on how to use all of them? Will the program run faster with all 8 GPUs? Here is my code:

```python
import logging
import os

import torch
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns
from torch.distributions import constraints

import pyro
import pyro.distributions as dist
import pyro.optim as optim
from scipy import stats

pyro.set_rng_seed(1)
RANDOM_SEED = 8927
np.random.seed(RANDOM_SEED)

# Make new tensors default to the GPU; in my case this only ever uses the first GPU (cuda:0).
# (Deprecated since PyTorch 2.1 in favour of torch.set_default_device / torch.set_default_dtype.)
torch.set_default_tensor_type('torch.cuda.FloatTensor')

# https://docs.pymc.io/notebooks/GLM-negative-binomial-regression.html
# Mean Poisson values
theta_noalcohol_meds = 1  # no alcohol, took an antihist
theta_alcohol_meds = 3  # alcohol, took an antihist
theta_noalcohol_nomeds = 6  # no alcohol, no antihist
theta_alcohol_nomeds = 36  # alcohol, no antihist

# Gamma shape parameter
alpha = 10


def get_nb_vals(mu, alpha, size):
    """Generate negative binomially distributed samples by
    drawing a sample from a gamma distribution with mean `mu` and
    shape parameter `alpha`, then drawing from a Poisson
    distribution whose rate parameter is given by the sampled
    gamma variable.
    """
    g = stats.gamma.rvs(alpha, scale=mu / alpha, size=size)
    return stats.poisson.rvs(g)


# Create samples: n observations for each of the four groups
n = 1000000
df = pd.DataFrame(
    {
        "nsneeze": np.concatenate(
            (
                get_nb_vals(theta_noalcohol_meds, alpha, n),
                get_nb_vals(theta_alcohol_meds, alpha, n),
                get_nb_vals(theta_noalcohol_nomeds, alpha, n),
                get_nb_vals(theta_alcohol_nomeds, alpha, n),
            )
        ),
        "alcohol": np.concatenate(
            (
                np.repeat(False, n),
                np.repeat(True, n),
                np.repeat(False, n),
                np.repeat(True, n),
            )
        ),
        "nomeds": np.concatenate(
            (
                np.repeat(False, n),
                np.repeat(False, n),
                np.repeat(True, n),
                np.repeat(True, n),
            )
        ),
    }
)
```
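For reference, `get_nb_vals` uses the standard gamma-Poisson mixture: if the Poisson rate is drawn from a gamma distribution with shape `alpha` and mean `mu`, the resulting counts are negative binomial with mean `mu` and variance `mu + mu**2 / alpha`. A minimal NumPy-only sketch that checks those two moments empirically; it swaps `scipy.stats` for `numpy.random.Generator`, and the helper name `gamma_poisson_samples` and the sample size are illustrative choices, not part of the original code:

```python
import numpy as np


def gamma_poisson_samples(mu, alpha, size, rng):
    # Hypothetical helper mirroring get_nb_vals.
    # Gamma with shape alpha and scale mu / alpha has mean mu and variance mu**2 / alpha.
    rates = rng.gamma(shape=alpha, scale=mu / alpha, size=size)
    # One Poisson count per sampled rate.
    return rng.poisson(rates)


rng = np.random.default_rng(8927)
mu, alpha = 6.0, 10.0
x = gamma_poisson_samples(mu, alpha, 200_000, rng)

print(f"sample mean {x.mean():.3f} (theory {mu})")
print(f"sample var  {x.var():.3f} (theory {mu + mu**2 / alpha})")
```

With `mu = 6` and `alpha = 10` the theoretical variance is 9.6, well above the plain Poisson value of 6; that overdispersion is what `phi` in `model` plays the role of.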

```python
def model(nsneeze, alcohol, nomeds, al_no):
    b0 = pyro.sample("b0", dist.Normal(torch.Tensor([0.]).cuda(), torch.Tensor([10.]).cuda()))
    b1 = pyro.sample("b1", dist.Normal(torch.Tensor([0.]).cuda(), torch.Tensor([10.]).cuda()))
    b2 = pyro.sample("b2", dist.Normal(torch.Tensor([0.]).cuda(), torch.Tensor([10.]).cuda()))
    b3 = pyro.sample("b3", dist.Normal(torch.Tensor([0.]).cuda(), torch.Tensor([10.]).cuda()))
    phi = pyro.sample("phi", dist.HalfCauchy(torch.Tensor([2.5]).cuda()))

    # Log link: mu is the expected number of sneezes per observation
    theta = b0 + b1 * alcohol + b2 * nomeds + b3 * al_no
    mu = torch.exp(theta)
    alpha = phi
    beta = phi / mu

    with pyro.plate("data", len(nsneeze), use_cuda=True):
        pyro.sample("obs", dist.GammaPoisson(alpha, beta), obs=nsneeze)


# Interaction term alcohol:nomeds, used as the model's fourth predictor
df["al_no"] = df["alcohol"] & df["nomeds"]

# A frame mixing int and bool columns gives an object array, so cast to float32 first
train = torch.tensor(
    df[["nsneeze", "alcohol", "nomeds", "al_no"]].values.astype(np.float32)
).cuda()
nsneeze, alcohol, nomeds, al_no = train[:, 0], train[:, 1], train[:, 2], train[:, 3]
```
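For reference, `dist.GammaPoisson(alpha, beta)` in `model` mixes a Poisson over a gamma-distributed rate with concentration $\phi$ and rate $\phi/\mu_i$, so the likelihood is a negative binomial regression with a log link. Writing $a_i$ and $m_i$ for the `alcohol` and `nomeds` indicators, and assuming `al_no` is their product $a_i m_i$ (notation introduced only for this sketch), the implied moments are:

$$
\begin{aligned}
\mu_i &= \exp\left(b_0 + b_1 a_i + b_2 m_i + b_3 a_i m_i\right) \\
\lambda_i &\sim \operatorname{Gamma}(\phi,\ \phi/\mu_i), \qquad \mathbb{E}[\lambda_i] = \mu_i, \qquad \operatorname{Var}[\lambda_i] = \mu_i^2/\phi \\
y_i \mid \lambda_i &\sim \operatorname{Poisson}(\lambda_i) \\
\mathbb{E}[y_i] &= \mu_i, \qquad \operatorname{Var}[y_i] = \mathbb{E}[\lambda_i] + \operatorname{Var}[\lambda_i] = \mu_i + \mu_i^2/\phi
\end{aligned}
$$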

```python
%%time
from pyro.infer.autoguide import AutoMultivariateNormal, init_to_mean
from pyro.infer import SVI, Trace_ELBO

num_iters = 10000
guide = AutoMultivariateNormal(model, init_loc_fn=init_to_mean)
svi = SVI(model,
          guide,
          optim.Adam({"lr": .001}),
          loss=Trace_ELBO())

pyro.clear_param_store()
loss = []
for i in range(num_iters):
    elbo = svi.step(nsneeze, alcohol, nomeds, al_no)
    loss.append(elbo)
    if i % 500 == 0:
        print("Elbo loss: {}".format(elbo))
```

As you can see, only 1 GPU is active: